Big Data Hadoop Administrator Training Program Overview Aurora, CO

Your team depends on your big data platform for every critical insight, yet clusters often remain volatile and opaque. Full disks, YARN resource deadlocks, and NameNode single points of failure disrupt business operations. Basic Linux administration skills are no longer enough: top employers in the Aurora, CO tech hub want certified Big Data administrators who can design scalable, fault-tolerant, and secure Big Data infrastructure. Without an administrator credential, resumes get filtered into the "System Admin" pile and miss high-paying Big Data Operations Lead and Data Architect roles.

This is not a generic Hadoop or MapReduce course. Our program is built by veteran Data and Cloud Architects who have maintained multi-tenant, production-grade clusters for IT giants and financial institutions in Aurora, CO. You'll master core administrator functions: capacity planning, resource isolation, cluster performance tuning, and securing distributed systems with Kerberos and related security tools. Learn practical skills that deliver immediate value: set YARN queue limits so a single runaway job cannot cause an outage, perform rolling upgrades without downtime, and configure monitoring and auditing to meet compliance requirements. The certification is formal proof, but the real value lies in confidently presenting a strategy for scaling a live production cluster from 10 nodes to 100.

This program is designed for experienced Systems Administrators, Cloud Engineers, and Infrastructure Leads in Aurora, CO seeking rapid upskilling in Big Data operations. Hands-on cluster labs, live troubleshooting scenarios, and 24x7 expert guidance help you move from reactive support to proactive cluster management. Build the skills to architect, secure, and scale Big Data systems, and position yourself for premium Big Data engineer and administrator jobs.

Big Data Hadoop Administrator Training Course Highlights Aurora, CO

Deep Cluster Maintenance Labs

Complete required hands-on labs in rolling upgrades, commissioning and decommissioning nodes, and file system checks (fsck) to keep clusters healthy and highly available.

Mastering YARN Resource Management

Stop resource-contention chaos by learning to configure the YARN Capacity and Fair Schedulers and manage multi-tenant access.

Advanced Security Implementation

Dedicated modules on securing HDFS and YARN with Kerberos and implementing service-level authorization, both non-negotiable skills in production environments.

40+ Hours of Practical Administration Training

A focused curriculum designed to directly address the skills tested in top-tier vendor administration certification exams (e.g., Cloudera Administrator).

2000+ Scenario-Based Questions

Cut through generic knowledge checks. Our question bank tests your reaction to real-world production failure scenarios and critical configuration trade-offs.

24x7 Expert Guidance & Support

Get immediate, high-quality answers to complex configuration and troubleshooting issues from actively practicing senior Big Data Administrators.

Corporate Training

Ready to transform your team?

Get a custom quote for your organization's training needs.

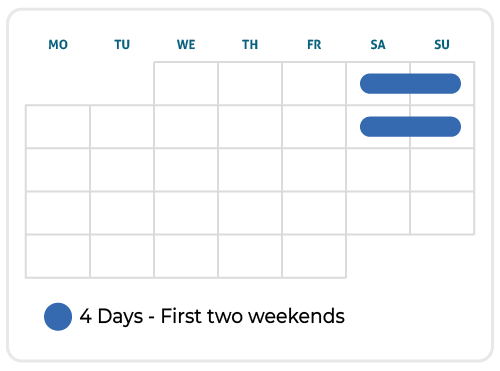

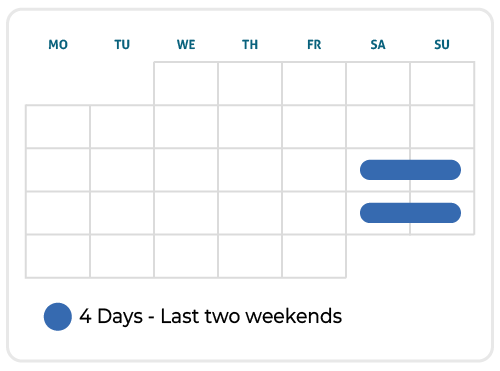

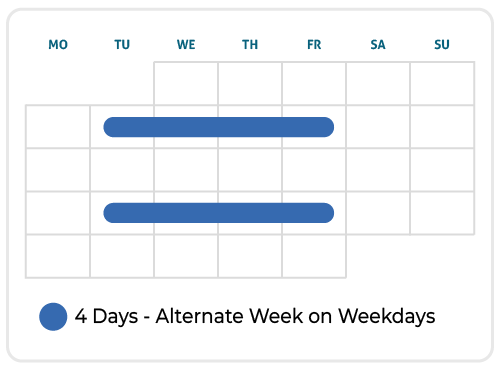

Skills You Will Gain In Our Big Data and Hadoop Training Program

Cluster Capacity Planning

Stop the guesswork. You will learn to calculate optimal node counts, disk configurations, and memory allocation based on real workload patterns and budget constraints.
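To make the arithmetic concrete, here is a minimal Python sketch that estimates a DataNode count from the volume of user data. It assumes HDFS's default replication factor of 3; the 25% headroom for intermediate data, the 12 x 4 TB disk layout, and the 80% fill ceiling are illustrative placeholders rather than recommendations, and a real plan would also size CPU, memory, and growth.

```python
import math


def estimate_datanodes(
    logical_tb: float,
    replication: int = 3,         # HDFS default block replication (dfs.replication)
    temp_overhead: float = 0.25,  # assumed headroom for shuffle/temporary data
    disks_per_node: int = 12,     # assumed hardware profile
    disk_tb: float = 4.0,         # assumed raw capacity per disk, in TB
    max_fill: float = 0.80,       # assumed ceiling: keep disks at most 80% full
) -> int:
    """Rough DataNode count needed to hold `logical_tb` of user data."""
    raw_needed_tb = logical_tb * replication * (1 + temp_overhead)
    usable_per_node_tb = disks_per_node * disk_tb * max_fill
    return math.ceil(raw_needed_tb / usable_per_node_tb)


if __name__ == "__main__":
    # 500 TB of user data -> 1875 TB raw, about 38.4 TB usable per node
    print(estimate_datanodes(500))  # → 49
```

Rerunning the function with different disk layouts or fill ceilings is a quick way to compare hardware options against a budget.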

YARN Resource Optimization

Master the Capacity and Fair Schedulers. You will learn how to configure queues, preemption, and resource isolation to ensure multi-tenant stability and prevent resource starvation.
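As an illustration of what queue configuration involves, the Python sketch below sanity-checks a flat Capacity Scheduler setup expressed as `capacity-scheduler.xml` properties. It relies on two Capacity Scheduler rules: the capacities of sibling queues must add up to 100 percent, and a queue's `maximum-capacity` should not be below its `capacity`. The queue names `etl` and `adhoc` are hypothetical, only one level under `root` is checked, and treating an unset `maximum-capacity` as 100 is a simplification of this sketch.

```python
def validate_capacity_queues(props: dict[str, str]) -> list[str]:
    """Return the problems found in a flat, root-level Capacity Scheduler setup."""
    prefix = "yarn.scheduler.capacity.root"
    queues = [q.strip() for q in props.get(f"{prefix}.queues", "").split(",") if q.strip()]
    problems: list[str] = []
    total = 0.0
    for q in queues:
        cap_key = f"{prefix}.{q}.capacity"
        if cap_key not in props:
            problems.append(f"{q}: missing capacity")
            continue
        cap = float(props[cap_key])
        # Simplification for this sketch: an unset maximum-capacity counts as 100%.
        max_cap = float(props.get(f"{prefix}.{q}.maximum-capacity", "100"))
        if max_cap < cap:
            problems.append(f"{q}: maximum-capacity {max_cap} < capacity {cap}")
        total += cap
    # Sibling queues must share 100% of the parent's resources.
    if queues and abs(total - 100.0) > 1e-6:
        problems.append(f"child capacities sum to {total}, expected 100")
    return problems


if __name__ == "__main__":
    props = {
        "yarn.scheduler.capacity.root.queues": "etl,adhoc",
        "yarn.scheduler.capacity.root.etl.capacity": "70",
        "yarn.scheduler.capacity.root.etl.maximum-capacity": "90",
        "yarn.scheduler.capacity.root.adhoc.capacity": "20",
    }
    print(validate_capacity_queues(props))  # → ['child capacities sum to 90.0, expected 100']
```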

Hadoop Security Implementation (Kerberos)

Go beyond theory. You will implement the complex but critical Kerberos security layer, configuring authentication for every service so that only verified users and daemons can access the cluster.

Fault Tolerance & HA Architecture

Maximize uptime. You will deploy and manage NameNode High Availability, configure automatic failover using ZooKeeper, and master critical backup and recovery procedures.

Monitoring & Diagnostics

Stop flying blind. You will integrate and interpret industry-standard monitoring tools (e.g., Ganglia, Grafana) alongside custom scripts to diagnose HDFS latency and YARN bottlenecks before they cause outages.

Data Ingestion Pipeline Setup

Architect for massive scale. You will learn to set up and configure robust, fault-tolerant data ingestion layers using tools like Flume, Kafka, and Sqoop to handle real-time and batch data loads.

Who This Program Is For

System Administrators (Linux/Windows)

IT Infrastructure Leads

Cloud Operations Engineers (DevOps)

Database Administrators (DBAs)

Big Data Support Engineers

Data Center Architects

If you run servers, cloud platforms, or data infrastructure and want to move into Big Data administration, this program is engineered to get you certified.

Big Data Hadoop Admin Certification Training Program Roadmap Aurora, CO

Why get Big Data Hadoop Admin-certified?

Stop getting filtered out by HR bots

Get the senior Data Operations and Infrastructure Architect interviews your current experience already deserves.

Unlock higher salary bands and retention bonuses

Qualify for the bonus structures employers reserve for certified experts who can keep clusters stable and data secure.

Transition from generic SysAdmin to Big Data Infrastructure Lead

Gain command over the enterprise data backbone.

Eligibility & Prerequisites

The administrator certification is for seasoned technical professionals. While official requirements vary by vendor (e.g., Cloudera, Hortonworks HDP), the following competencies are expected everywhere:

Formal Training: Completion of 40+ hours of dedicated, hands-on Hadoop Administration training is a minimum expectation, fully satisfied by this program.

Linux/OS Expertise: Strong proficiency with the Linux command line, shell scripting, networking, and system troubleshooting is required before enrollment.

Hands-on Cluster Experience: You must demonstrate practical, non-trivial experience in setting up, tuning, securing, and maintaining a multi-node Hadoop/YARN cluster. Our labs provide this rigorous exposure.

Course Modules & Curriculum

Module 1

Lesson 1: Hadoop Cluster Maintenance and Administration

Master essential admin tasks: commissioning and decommissioning nodes, performing rolling upgrades, file system checks (fsck), and managing NameNode metadata.
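Decommissioning a DataNode typically means adding its hostname to the exclude file named by `dfs.hosts.exclude` in `hdfs-site.xml` and then running `hdfs dfsadmin -refreshNodes`, so the NameNode re-replicates the node's blocks before it is removed. The Python sketch below covers only the file-editing step, as a pure function; the hostnames are hypothetical, and writing the file and running the refresh command are left to your own tooling.

```python
def updated_exclude_text(current: str, hosts: list[str]) -> str:
    """Return new exclude-file contents with `hosts` added, one host per line.

    Existing entries are kept, '#' comments are dropped, and the result is
    de-duplicated and sorted so repeated runs produce the same file.
    """
    existing: set[str] = set()
    for line in current.splitlines():
        existing.update(line.split("#", 1)[0].split())
    merged = sorted(existing | {h.strip() for h in hosts if h.strip()})
    return ("\n".join(merged) + "\n") if merged else ""


# Command to run afterwards so the NameNode re-reads its include/exclude files.
REFRESH_CMD = ["hdfs", "dfsadmin", "-refreshNodes"]

if __name__ == "__main__":
    print(updated_exclude_text("dn07.example.com\n", ["dn12.example.com"]), end="")
```

YARN has a parallel mechanism for NodeManagers: the file named by `yarn.resourcemanager.nodes.exclude-path`, refreshed with `yarn rmadmin -refreshNodes`.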

Lesson 2: Hadoop Computational Frameworks & Scheduling

An administrator's view of MapReduce and Spark. Deep dive into YARN (Yet Another Resource Negotiator) architecture: ResourceManager, NodeManager, and ApplicationMaster.

Lesson 3: Scheduling: Managing Resources and Isolation

Master the Capacity Scheduler and Fair Scheduler. Learn to configure resource queues, preemption, and resource isolation to prevent critical jobs from failing in a multi-tenant environment.

Module 2

Lesson 1: Hadoop Cluster Planning

Move beyond setup. Learn systematic capacity planning, hardware sizing, network considerations, and performance benchmarking based on expected workload.

Lesson 2: Data Ingestion in Hadoop Cluster

Set up and configure robust data ingestion tools. Master Flume for streaming log collection and Sqoop for relational database import/export.

Lesson 3: Hadoop Ecosystem Component Services

Understand the role and administrative configuration of vital ecosystem components: ZooKeeper (coordination), Oozie (workflow scheduling), and Impala/Hive performance settings.

Module 3

Lesson 1: Hadoop Security Core Concepts

Understand the fundamental security challenges in a distributed system. Deep dive into authentication, authorization, and encryption mechanisms within the Hadoop stack.

Lesson 2: Hadoop Security Implementation (Kerberos)

Mandatory hands-on Kerberos implementation for cluster authentication: configuring principals and keytabs and setting up secure client access.
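Kerberos principals follow the `primary/instance@REALM` pattern, and Hadoop service configurations commonly use the `_HOST` placeholder (for example, `nn/_HOST@EXAMPLE.COM`) so one setting can be shared across hosts, with each daemon substituting its own fully qualified hostname. The Python sketch below mimics that substitution and splits a principal into its parts. `EXAMPLE.COM` and the hostnames are placeholders, and lowercasing the hostname is an assumption of this sketch that you should check against your Hadoop version.

```python
from typing import NamedTuple, Optional


class Principal(NamedTuple):
    primary: str             # service or user name, e.g. "nn" or "alice"
    instance: Optional[str]  # usually a hostname for service principals
    realm: str               # Kerberos realm, conventionally upper case


def expand_host(principal: str, fqdn: str) -> str:
    """Replace the _HOST placeholder with this node's hostname (lowercased here)."""
    return principal.replace("_HOST", fqdn.lower())


def parse_principal(principal: str) -> Principal:
    """Split 'primary[/instance]@REALM' into its components."""
    name, at, realm = principal.rpartition("@")
    if not at or not name or not realm:
        raise ValueError(f"principal has no realm: {principal!r}")
    primary, slash, instance = name.partition("/")
    return Principal(primary, instance if slash else None, realm)


if __name__ == "__main__":
    p = parse_principal(expand_host("nn/_HOST@EXAMPLE.COM", "NN1.example.com"))
    print(p)  # → Principal(primary='nn', instance='nn1.example.com', realm='EXAMPLE.COM')
```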

Lesson 3: Auditing and Service-Level Authorization

Configure HDFS and YARN for detailed auditing. Implement Hadoop service-level authorization to restrict which users and groups can access specific services, such as HDFS client operations or YARN application submission.
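Service-level authorization is switched on with `hadoop.security.authorization` in `core-site.xml`, and its per-protocol ACLs (such as `security.client.protocol.acl` for HDFS clients) live in `hadoop-policy.xml`. Each ACL value lists comma-separated users, then a space, then comma-separated groups, with `*` meaning everyone. The Python sketch below evaluates such a string to show how the format is read. It illustrates the format only, it is not Hadoop's own implementation, and the user and group names are hypothetical.

```python
def acl_allows(acl: str, user: str, user_groups: set[str]) -> bool:
    """Evaluate a Hadoop-style ACL string: 'user1,user2 group1,group2'.

    Users come before the first space and groups after it, so a value with a
    leading space (' admins') names groups only. '*' allows everyone.
    """
    if acl.strip() == "*":
        return True
    users_part, _, groups_part = acl.partition(" ")
    users = {u.strip() for u in users_part.split(",") if u.strip()}
    groups = {g.strip() for g in groups_part.split(",") if g.strip()}
    return user in users or bool(groups & user_groups)


if __name__ == "__main__":
    acl = "alice,bob hadoop-admins"
    print(acl_allows(acl, "bob", set()))                # → True
    print(acl_allows(acl, "carol", {"hadoop-admins"}))  # → True
    print(acl_allows(acl, "dave", {"analysts"}))        # → False
```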

Module 4

Lesson 1: Hadoop Cluster Monitoring

Integrate monitoring tools (Ganglia/Prometheus/Grafana) to visualize key cluster metrics (CPU, disk I/O, YARN queue depth). Set up effective alerting.
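Effective alerting comes down to turning raw metrics into clear states. The Python sketch below classifies HDFS capacity usage. It assumes the metrics have already been collected into a dictionary (for instance from the NameNode's JMX endpoint); the field names `CapacityTotal` and `CapacityUsed` are assumptions to verify against your Hadoop version, and the 75% and 90% thresholds are placeholders.

```python
def hdfs_capacity_status(
    metrics: dict[str, float],
    warn_pct: float = 75.0,  # placeholder warning threshold
    crit_pct: float = 90.0,  # placeholder critical threshold
) -> str:
    """Map HDFS capacity metrics to OK / WARNING / CRITICAL / UNKNOWN."""
    total = metrics.get("CapacityTotal", 0)  # assumed metric name
    used = metrics.get("CapacityUsed", 0)    # assumed metric name
    if total <= 0:
        return "UNKNOWN"  # missing data is reported rather than treated as healthy
    used_pct = 100.0 * used / total
    if used_pct >= crit_pct:
        return "CRITICAL"
    if used_pct >= warn_pct:
        return "WARNING"
    return "OK"


if __name__ == "__main__":
    print(hdfs_capacity_status({"CapacityTotal": 1000, "CapacityUsed": 920}))  # → CRITICAL
```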

Lesson 2: Hadoop Monitoring and Troubleshooting Scenarios

Dedicated lab time for troubleshooting common issues: NameNode failure, DataNode failures, network bottlenecks, YARN container errors, and configuration errors.

Lesson 3: High Availability and Disaster Recovery

Master NameNode High Availability (HA) using the Quorum Journal Manager (QJM), and implement backup, restoration, and disaster recovery strategies for your enterprise data.
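The Quorum Journal Manager commits an edit only once a majority of JournalNodes have acknowledged it, which is why JournalNodes (like ZooKeeper servers) are deployed in odd numbers. The short Python sketch below works through the arithmetic: with N nodes, a write needs floor(N/2) + 1 acknowledgements, and the system tolerates floor((N - 1)/2) failures.

```python
def quorum_size(n: int) -> int:
    """Acknowledgements needed for a majority of n nodes."""
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """Nodes that can fail while a majority is still reachable."""
    return (n - 1) // 2


if __name__ == "__main__":
    for n in (3, 4, 5):
        print(n, quorum_size(n), tolerated_failures(n))
    # 3 2 1
    # 4 3 1   <- a fourth node adds no extra fault tolerance
    # 5 3 2
```

Moving from three JournalNodes to four buys nothing, while five tolerate two simultaneous failures.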