Apache Kafka Certification Training Program Overview Plymouth, England

Your current systems are reactive: they process yesterday's data with hourly ETL jobs, daily batch cycles, and delayed responses to incidents. Meanwhile, enterprises in Hyderabad, Mumbai, and Plymouth, England rely on real-time data streams for instant payment processing, live fraud detection, and immediate user personalization. They need certified experts, Apache Kafka Architects and Engineers, who can design and manage these high-throughput systems. Recruiters searching for "Apache Kafka", "Confluent", "ZooKeeper", and Producer/Consumer APIs are currently filtering you out. Traditional integration skills are becoming legacy, while microservices and event-driven architecture dominate high-paying data engineering roles.

This isn't just another Apache Kafka tutorial. Our Apache Kafka course is built by senior Data Streaming and Cloud Engineers who design real-world, high-volume pipelines for the Telecom and Fintech sectors in Plymouth, England. You'll master the non-negotiable architectural principles: partitioning strategies, replication factors, consumer groups, and the trade-offs between throughput and fault tolerance. You'll also gain hands-on experience across the Apache Kafka ecosystem: Kafka Connect for integration with external systems, and Kafka Streams for complex, in-flight data processing.

This Apache Kafka Certification ensures you can confidently design fault-tolerant, multi-AZ streaming pipelines capable of 100,000 messages per second, making you indispensable in real-time data environments. The program also equips you to navigate the Apache Kafka documentation, recommended Apache Kafka books, and practical deployment scenarios, giving you the full toolkit to thrive in modern, event-driven architectures.

Apache Kafka Training Course Highlights Plymouth, England

Deep Dive into Broker Internals

Master log segments, index files, and critical configuration parameters that separate basic users from high-performance cluster administrators.
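
To make the idea of index files concrete, here is a minimal Python sketch of a sparse offset index lookup. The index contents and the function name are hypothetical; the sketch only illustrates the general technique of binary-searching a sparse index for the nearest entry at or below a target offset, after which the log would be scanned forward from that byte position.

```python
from bisect import bisect_right

# Hypothetical sparse index for one log segment:
# (relative_offset, byte_position) pairs, sorted by offset.
INDEX = [(0, 0), (50, 4096), (100, 8192), (150, 12288)]


def floor_index_entry(index, target_offset):
    """Return the index entry with the largest offset <= target_offset."""
    offsets = [offset for offset, _ in index]
    pos = bisect_right(offsets, target_offset) - 1
    if pos < 0:
        raise KeyError(f"offset {target_offset} precedes this segment")
    return index[pos]


# Offset 120 is not indexed, so the lookup lands on the entry for offset 100.
print(floor_index_entry(INDEX, 120))  # (100, 8192)
```

Because the index is sparse, it stays small enough to search quickly, at the cost of a short forward scan in the log file.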

Producers & Consumers Code Mastery

Intensive hands-on labs focused on writing robust, optimized Producer and Consumer applications with reliable message delivery logic.

Advanced Partitioning Strategy

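Learn to design topics with the correct key and partition structure to eliminate hot spots and preserve per-key message ordering at high volume. Kafka guarantees ordering only within a single partition, so key design determines which messages stay in order.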
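
As a rough illustration of how keys map to partitions and how a skewed key creates a hot spot, consider the Python sketch below. It uses CRC32 as a stand-in hash (Kafka's Java default partitioner uses murmur2, so actual partition numbers will differ), and the key names are made up for the example.

```python
import zlib
from collections import Counter


def partition_for(key: bytes, num_partitions: int) -> int:
    # Stand-in hash for illustration; not the hash Kafka clients use.
    return zlib.crc32(key) % num_partitions


def partition_load(keys, num_partitions):
    """Count how many records land on each partition."""
    return Counter(partition_for(k, num_partitions) for k in keys)


# 90 records share one key, 10 records have distinct keys (hypothetical data).
keys = [b"tenant-big"] * 90 + [f"tenant-{i}".encode() for i in range(10)]
load = partition_load(keys, num_partitions=6)
print(load.most_common(1))  # one partition carries at least 90 of 100 records
```

The same key always maps to the same partition, which is what preserves per-key ordering, and it is also why a single dominant key overloads one partition.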

ZooKeeper & Controller Expertise

Gain deep knowledge of ZooKeeper's role in cluster coordination, leader election, and metadata management in ZooKeeper-based clusters, and understand how newer Kafka releases replace it with the built-in KRaft controller quorum.

40+ Hours of Practical Cluster Labs

Intensive hands-on time dedicated to setting up, monitoring, load testing, and failure recovery on a multi-broker Kafka cluster.

24x7 Expert Guidance & Support

Get immediate, high-quality answers to complex API, configuration, and replication issues from actively practicing Kafka Streaming Engineers.

Corporate Training

Ready to transform your team?

Get a custom quote for your organization's training needs.

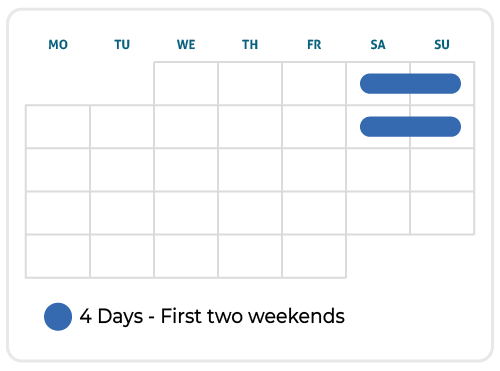

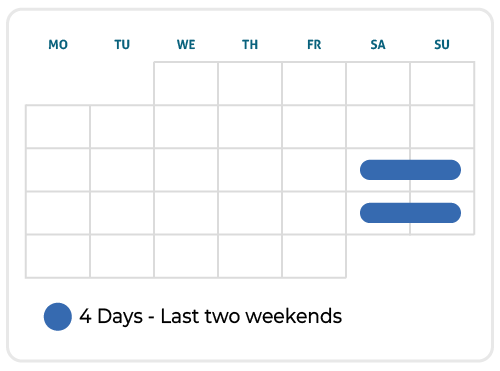

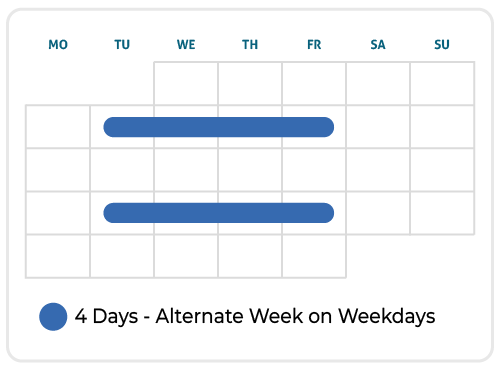


Skills You Will Gain In Our Apache Kafka Training Program

Real-Time Architecture Design

Move past simple pub/sub. You will learn to architect the correct Topic, Partition, and Replication factor strategy for high-volume, low-latency use cases like clickstream analytics and IoT ingestion.
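
One common rule of thumb for sizing a topic is to take the larger of the partition counts needed on the producer side and on the consumer side. The Python sketch below shows that arithmetic; the per-partition throughput figures are hypothetical and would need to be measured on your own hardware.

```python
import math


def min_partitions(target, producer_per_partition, consumer_per_partition):
    """Smallest partition count that meets the target on both sides."""
    return max(
        math.ceil(target / producer_per_partition),
        math.ceil(target / consumer_per_partition),
    )


# Assumed numbers: 100,000 msg/s target, 20,000 msg/s per partition when
# producing, 8,000 msg/s per partition when consuming.
print(min_partitions(100_000, 20_000, 8_000))  # 13
```

Here the consumer side is the bottleneck (12.5 rounds up to 13), which is typical when consumers do real work per message.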

High-Throughput Producers

Master the critical Producer configurations - batching, compression, and asynchronous sending - to maximize message throughput while preserving delivery guarantees.
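
A minimal sketch of what such a configuration might look like is shown below as a plain Python dict. The property names are standard Kafka producer settings; the values and the broker address are illustrative assumptions, not recommendations. The callback follows the `(err, msg)` shape used by confluent-kafka delivery callbacks, and here it only records failures in a list.

```python
# Illustrative producer settings; tune the values against your own workload.
PRODUCER_CONFIG = {
    "bootstrap.servers": "localhost:9092",  # hypothetical broker address
    "acks": "all",                # wait for all in-sync replicas
    "enable.idempotence": True,   # avoid duplicates on producer retries
    "linger.ms": 20,              # wait up to 20 ms to fill a batch
    "batch.size": 65536,          # maximum batch size in bytes
    "compression.type": "lz4",    # compress whole batches
}

failed = []


def on_delivery(err, msg):
    """Delivery callback for asynchronous sends: remember failures."""
    if err is not None:
        failed.append((msg, err))
```

A client library would accept the dict when constructing the producer and invoke the callback once per message, so the sending loop never blocks on individual acknowledgements.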

Fault-Tolerant Consumers

Learn to design Consumer Groups for parallel processing, manage offsets correctly, and implement effective retry and error handling to guarantee data processing completeness.
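
The retry and error-handling idea can be sketched in a few lines of Python. This is a simplified, broker-free illustration: `process_with_retry` and the in-memory dead-letter list are hypothetical names, and a real consumer would typically publish failed records to a separate topic instead.

```python
def process_with_retry(records, handler, max_attempts=3):
    """Apply handler to each record, retrying on failure.

    Records that still fail after max_attempts go to a dead-letter list
    so one bad record does not block the rest of the partition.
    """
    processed, dead_letters = [], []
    for record in records:
        for attempt in range(1, max_attempts + 1):
            try:
                processed.append(handler(record))
                break
            except Exception as exc:
                if attempt == max_attempts:
                    dead_letters.append((record, repr(exc)))
    return processed, dead_letters


def handler(record):
    if record == "bad":
        raise ValueError("cannot parse record")
    return record.upper()


ok, dlq = process_with_retry(["a", "bad", "b"], handler)
print(ok)         # ['A', 'B']
print(dlq[0][0])  # bad
```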

Cluster Broker Management

Gain mandatory skills in managing Kafka brokers, including configuring logs, understanding critical internal metrics, and performing seamless rolling restarts and capacity upgrades.

Data Ingestion and Export (Kafka Connect)

Master the use of Kafka Connect to integrate Kafka with external systems (Databases, S3, HDFS), eliminating brittle, custom-coded ETL jobs.

Stream Processing (Kafka Streams/KSQL)

Learn to perform in-flight data transformations, aggregations, and joins using Kafka Streams or KSQL, enabling real-time analytics and decision-making on live data.

Who This Program Is For

Software Engineers (Java/Python)

Data Engineers / ETL Developers

Solution Architects

DevOps Engineers / System Administrators

Data Warehouse Developers

Technical Leads

If you build, operate, or architect data pipelines and meet the prerequisites listed below, this program is engineered to get you certified.

Apache Kafka Certification Training Program Roadmap

Why get Apache Kafka certified?

Stop getting filtered out by HR bots

Get the senior Data Streaming and Event-Driven Architecture interviews your skills already deserve.

Unlock the higher salary bands and specialized bonuses

Gain access to bonus structures reserved for certified experts who guarantee the performance and stability of real-time pipelines.

Transition to low-latency, strategic event-driven design

Transition from batch processing to strategic design, becoming a critical part of the modern enterprise backbone.

Eligibility & Prerequisites

While Kafka is open-source, the most respected certifications are provided by Confluent (the company founded by Kafka's creators). To sit for the Confluent Certified Developer or Administrator exams, you typically need:

Formal Training/Experience: Completion of 40+ hours of dedicated Kafka training, covering architecture, APIs, Connect, and Streams, is the minimum expectation (satisfied by this course).

Coding Proficiency: For the Developer certification, mandatory, demonstrable ability to code Producers and Consumers in a modern language (Java/Python) is essential.

Practical Deployment: For the Administrator certification, proven hands-on experience in setting up, monitoring, and troubleshooting multi-broker clusters. Our labs provide this rigorous exposure.

Course Modules & Curriculum

Lesson 1: Consumer API and Consumer Groups

Master the Consumer API (Java/Python). Design Consumer Groups for scalable, parallel reading of partitions. Learn client-side configuration for optimal fetch sizes and time-outs.
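
To show how a consumer group spreads partitions across its members, here is a small Python sketch of a round-robin style assignment. It is a simplified model for intuition, not the assignor code that Kafka clients run, and the consumer names are made up.

```python
def round_robin_assign(partitions, consumers):
    """Deal partitions out to consumers one at a time, in sorted order."""
    members = sorted(consumers)
    assignment = {member: [] for member in members}
    for i, partition in enumerate(sorted(partitions)):
        assignment[members[i % len(members)]].append(partition)
    return assignment


print(round_robin_assign(range(6), ["c1", "c2", "c3", "c4"]))
# {'c1': [0, 4], 'c2': [1, 5], 'c3': [2], 'c4': [3]}

# With more consumers than partitions, the extra consumer sits idle.
print(round_robin_assign(range(2), ["c1", "c2", "c3"]))
# {'c1': [0], 'c2': [1], 'c3': []}
```

Each partition is read by exactly one member of the group, so the partition count caps the useful parallelism of a consumer group.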

Lesson 2: Offset Management and Delivery Semantics

Understand where offsets are stored and how to commit them correctly (automatic vs. manual). Implement robust code to achieve "exactly-once" processing using transaction IDs and external stores.
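
The effect of commit timing can be simulated without a broker. In the Python sketch below (all names are hypothetical), the consumer processes a record and then commits the next offset to read. A crash between processing and committing causes a replay, which is at-least-once delivery; writing results to an external store keyed by offset makes that replay harmless.

```python
def consume(log, committed, sink, crash_before_commit_at=None):
    """Process records starting at the committed offset.

    Commits after each record; returns the last committed offset.
    """
    for offset in range(committed, len(log)):
        sink(offset, log[offset])
        if offset == crash_before_commit_at:
            return committed  # crashed: processed but never committed
        committed = offset + 1  # the committed value is the NEXT offset
    return committed


log = ["a", "b", "c"]
seen = []    # naive sink: appends every delivery
store = {}   # idempotent sink: external store keyed by offset


def sink(offset, value):
    seen.append(value)
    store[offset] = value


committed = consume(log, 0, sink, crash_before_commit_at=1)
committed = consume(log, committed, sink)  # restart after the crash

print(seen)   # ['a', 'b', 'b', 'c']  record "b" was delivered twice
print(store)  # {0: 'a', 1: 'b', 2: 'c'}  the keyed store absorbs the replay
```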

Lesson 3: Advanced Consumer Rebalancing and Lag Monitoring

Troubleshoot consumer rebalances and lag issues. Configure session time-outs and heartbeats to maintain stability in dynamic production environments. This module also draws on insights from advanced Apache Kafka books and prepares you for practical Apache Kafka certification exams.
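
Consumer lag is simply the gap between the newest offset in each partition and the offset the group has committed. A minimal Python sketch, using made-up offsets and treating a partition with no commit as starting from 0 (an assumption for the example; the real starting point depends on the consumer's reset policy):

```python
def consumer_lag(end_offsets, committed_offsets):
    """Per-partition lag: log end offset minus committed offset."""
    return {
        partition: end - committed_offsets.get(partition, 0)
        for partition, end in end_offsets.items()
    }


end_offsets = {0: 1500, 1: 1200}   # hypothetical log end offsets
committed = {0: 1450, 1: 700}      # hypothetical committed offsets

lag = consumer_lag(end_offsets, committed)
print(lag)                # {0: 50, 1: 500}
print(sum(lag.values()))  # 550
```

A lag that keeps growing on one partition while others stay flat usually points to a slow or stuck consumer, or to a hot key on that partition.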

Lesson 1: Kafka Connect for Data Integration

Master the architecture of Kafka Connect (Source and Sink Connectors). Learn to deploy, configure, and monitor Connect workers for reliable database and storage integration.
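
For a sense of what configuring a connector looks like, the Python sketch below builds the JSON payload for a file source connector. `FileStreamSourceConnector` is the example connector that ships with Apache Kafka; the connector name, file path, and topic are hypothetical. In a running deployment a payload like this would be submitted to the Connect REST API's `/connectors` endpoint.

```python
import json

# Hypothetical connector definition; only the connector class is a real name.
connector = {
    "name": "demo-file-source",
    "config": {
        "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
        "tasks.max": "1",
        "file": "/tmp/demo-input.txt",  # assumed input file
        "topic": "demo-lines",          # assumed target topic
    },
}

payload = json.dumps(connector, indent=2)
print(payload)
```

The point of Connect is that integration becomes configuration like this rather than custom producer or consumer code.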

Lesson 2: Introduction to Kafka Streams and KSQL

Understand the purpose of stream processing. Get introduced to the Kafka Streams DSL (KStream, KTable) for simple filtering, transformation, and aggregation.
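
The difference between a stream and a table view of the same data can be sketched in plain Python. This is a conceptual model only, not the Kafka Streams API: a stream treats every record as an independent event, while a table keeps the latest value per key.

```python
from collections import Counter

# Hypothetical changelog of (key, value) records in arrival order.
events = [("alice", 1), ("bob", 5), ("alice", 3)]


def as_table(records):
    """Table view: later records overwrite earlier ones for the same key."""
    table = {}
    for key, value in records:
        table[key] = value
    return table


def count_by_key(records):
    """Stream aggregation: count every event per key."""
    return Counter(key for key, _ in records)


print(as_table(events))            # {'alice': 3, 'bob': 5}
print(dict(count_by_key(events)))  # {'alice': 2, 'bob': 1}
```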

Lesson 3: Advanced Stream Processing and Windowing

Master stateful operations, joins, and aggregations using tumbling (fixed-size) and hopping time windows, the core techniques for complex real-time analytics.
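
The window arithmetic itself is straightforward. The Python sketch below computes which fixed (tumbling) window and which hopping windows a record timestamp falls into. It is a simplified model with windows aligned to time zero and timestamps in seconds; the function names are hypothetical.

```python
def tumbling_window(ts, size):
    """The single [start, end) window of the given size that contains ts."""
    start = ts - ts % size
    return (start, start + size)


def hopping_windows(ts, size, advance):
    """All [start, end) windows containing ts.

    Windows have length `size` and a new one starts every `advance` units.
    """
    windows = []
    start = ts - ts % advance  # latest aligned window start <= ts
    while start > ts - size and start >= 0:
        windows.append((start, start + size))
        start -= advance
    return sorted(windows)


print(tumbling_window(125, size=60))              # (120, 180)
print(hopping_windows(125, size=60, advance=30))  # [(90, 150), (120, 180)]
```

A record belongs to exactly one fixed window but to `size / advance` hopping windows, which is why hopping aggregations update several results per record.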

Lesson 1: Cluster Operations and Maintenance

Learn mandatory administrator tasks for Apache Kafka clusters: rolling restarts, log size monitoring, broker decommissioning, and essential command-line health checks. This practical module complements Apache Kafka tutorials and prepares you for the real-world operations covered in an Apache Kafka course or Apache Kafka certification.

Lesson 2: Performance Monitoring and Load Testing

Identify critical metrics (under-replicated partitions, consumer lag, request latency). Learn to use monitoring tools (Prometheus, JMX) and load testing to validate performance and capacity.
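
A partition counts as under-replicated when its in-sync replica set (ISR) is smaller than its assigned replica list. The Python sketch below applies that check to a hypothetical snapshot of partition state; in practice this data would come from broker metrics or the topic description tooling.

```python
def under_replicated(partitions):
    """Names of partitions whose ISR is smaller than the replica list."""
    return [
        name
        for name, state in partitions.items()
        if len(state["isr"]) < len(state["replicas"])
    ]


# Hypothetical snapshot: broker ids per partition.
snapshot = {
    "orders-0": {"replicas": [1, 2, 3], "isr": [1, 2, 3]},
    "orders-1": {"replicas": [1, 2, 3], "isr": [2]},
}

print(under_replicated(snapshot))  # ['orders-1']
```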

Lesson 3: Kafka Security and Failure Recovery

Understand security layers (SSL/TLS for encryption, SASL for authentication). Master critical failure scenarios: partition leader failure, full disk, and recovery procedures.
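
The partition leader failure scenario can be modeled in a few lines of Python. This sketch assumes unclean leader election is disabled, so only a surviving in-sync replica may take over; the function name and broker ids are hypothetical.

```python
def elect_leader(replicas, isr, failed_broker):
    """Pick the first assigned replica that is still alive and in sync.

    Returns None when no in-sync replica survives, meaning the partition
    stays offline until one comes back.
    """
    live_isr = [broker for broker in isr if broker != failed_broker]
    for broker in replicas:
        if broker in live_isr:
            return broker
    return None


# Leader 1 fails while all replicas are in sync: broker 2 takes over.
print(elect_leader(replicas=[1, 2, 3], isr=[1, 2, 3], failed_broker=1))  # 2

# Leader 1 fails while it was the only in-sync replica: partition offline.
print(elect_leader(replicas=[1, 2, 3], isr=[1], failed_broker=1))  # None
```

The second case shows why a shrinking ISR deserves an alert well before a broker actually fails.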