Big Data Hadoop Training Program Overview in Asheville, NC

You've witnessed the Big Data explosion. Your SQL servers can't keep up with today's massive data streams, and your manual ETL jobs are breaking under the load. Your data warehousing skills still hold value, but they are quickly losing ground in an era dominated by Big Data technologies and cloud-driven ecosystems.

Meanwhile, enterprises in hubs like Hyderabad, Bengaluru, and Delhi are aggressively hiring professionals who can process and analyze terabytes of streaming data from IoT devices, retail transactions, and social media using modern big data analytics tools. These roles can pay 40-60% more for professionals certified in Hadoop, Spark, and Hive. While you manage outdated systems, recruiters are screening for validated expertise in Hadoop, Spark, Hive, and Impala. Without certification, your resume is often filtered out before you ever reach an interview for high-value big data engineer or developer roles.

This isn't a superficial course on buzzwords. Our Hadoop training program is engineered for deep, practical mastery of Big Data analytics and architecture. You'll understand the real-world trade-offs between HDFS, MapReduce, Spark, and NoSQL databases like HBase. You'll design scalable ingestion pipelines using Flume and Kafka, optimize Hive queries to reduce cloud costs by up to 30%, and learn to architect big data analytics systems that deliver both performance and efficiency.

Our curriculum is designed specifically for IT professionals, BI developers, and database administrators in Asheville, NC who want to make a strategic leap into a Big Data engineer role. It's led by experts who have built and maintained production clusters on AWS, Azure, and on-premises environments. We skip the academic fluff and focus entirely on what matters: practical, enterprise-scale data engineering.

This is your chance to move from outdated systems to modern, distributed architectures and earn the Big Data certification that proves you can design and maintain the data backbone of a modern enterprise.

Big Data Hadoop Training Course Highlights in Asheville, NC

Production-Ready Project Portfolio

Complete a major project integrating HDFS, Spark, Hive, and a scheduler like Oozie, giving you tangible proof of capability for your next job interview.

Deep Cluster Administration Focus

Dedicated modules on multi-node setup, monitoring, troubleshooting, and ZooKeeper management prepare you for a real Data Architect or Hadoop Administrator role.

2000+ Scenario-Based Questions

Cut through the generic exam prep. Our question bank is engineered to test your understanding of architectural choices and real-world failure scenarios.

Optimized Learning Path

A structured, 6-week curriculum designed by industry leads to take you from legacy data skills to production-ready Hadoop and Spark expertise with no wasted time.

Cloud & Infrastructure Agnostic Skills

While we use EC2 for setup, the core skills in HDFS, MapReduce, and Spark architecture are portable, protecting your skills from platform shifts.

24x7 Expert Guidance & Support

Get immediate, high-quality answers to your complex architectural and setup questions from actively practicing senior data engineers.

Corporate Training

Ready to transform your team?

Get a custom quote for your organization's training needs.

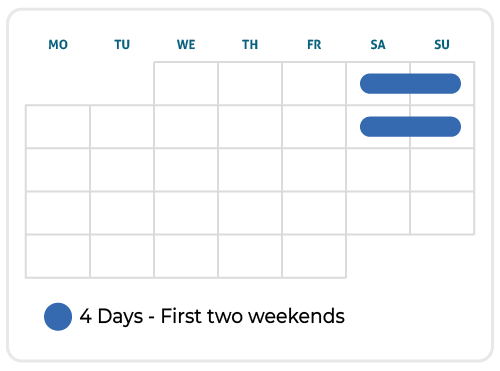

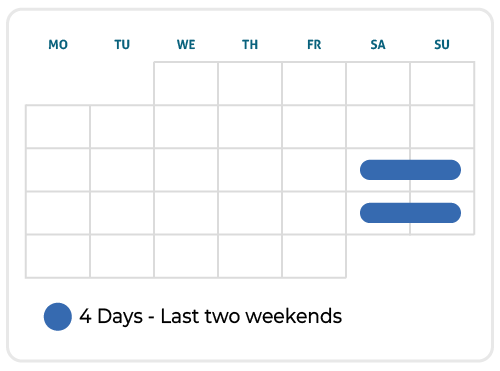

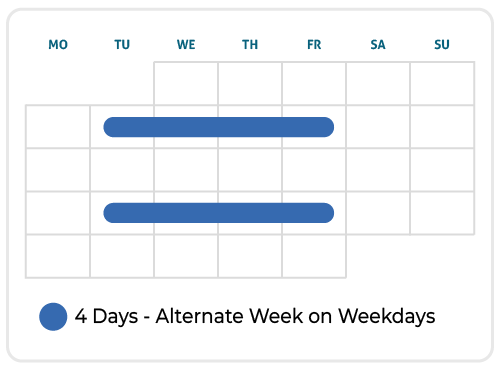

Upcoming Schedule

Skills You Will Gain in Our Big Data Hadoop Training Program

Risk Management

You'll learn to anticipate DataNode failures, replication issues, and resource contention in YARN, and to architect for high availability and fault tolerance rather than just implementing a basic setup.
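Fault tolerance in HDFS starts with where block replicas land. The sketch below is a simplified, deterministic model of the default rack-aware placement for a replication factor of 3: the first replica on the writer's node, the second on a node in a different rack, and the third on another node in that same remote rack. The topology and node names are hypothetical, and the real NameNode also picks nodes randomly and weighs free space and load, so treat this as an illustration rather than HDFS's actual implementation.

```python
# Hypothetical two-rack cluster: rack path -> DataNode hostnames.
TOPOLOGY = {
    "/rack1": ["dn1", "dn2", "dn3"],
    "/rack2": ["dn4", "dn5", "dn6"],
}


def rack_of(node, topology):
    """Return the rack that hosts a given DataNode."""
    for rack, nodes in topology.items():
        if node in nodes:
            return rack
    raise KeyError(f"unknown node: {node}")


def place_replicas(writer, topology):
    """Simplified, deterministic model of HDFS's default 3-replica placement."""
    # Replica 1: the writer's own node (assumes the client runs on a DataNode).
    first = writer
    local_rack = rack_of(first, topology)
    # Replica 2: a node on a different rack (first candidate, for determinism).
    remote_rack = next(r for r in sorted(topology) if r != local_rack)
    second = topology[remote_rack][0]
    # Replica 3: a different node on the same remote rack as replica 2.
    third = next(n for n in topology[remote_rack] if n != second)
    return [first, second, third]


def survives_rack_loss(replicas, topology):
    """True if at least one replica remains after losing any single rack."""
    racks = {rack_of(n, topology) for n in replicas}
    return all(racks - {lost} for lost in topology)


replicas = place_replicas("dn2", TOPOLOGY)
print(replicas)                                # ['dn2', 'dn4', 'dn5']
print(survives_rack_loss(replicas, TOPOLOGY))  # True
```

Spreading replicas across two racks, rather than three, keeps cross-rack write traffic down while still surviving the loss of an entire rack; placing all three on one rack would fail that check.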

Cluster Optimization

Stop running expensive, slow jobs. You will master techniques for partitioning, bucketing, indexing, and cost-based query optimization in Hive and Impala to deliver results in seconds, not hours.

Real-Time Data Ingestion

Move beyond static batch processing. You will implement robust, fault-tolerant pipelines using tools like Flume and Spark Streaming to handle live data feeds from thousands of sources.

Distributed Programming

Go deeper than basic word counts. You will master the fundamentals of MapReduce and the advanced, in-memory processing capabilities of Apache Spark (Scala/Python) for complex iterative algorithms.

Ecosystem Integration

The real challenge is connecting the dots. You will learn how to orchestrate complex workflows using Oozie, coordinate configuration with ZooKeeper, and ensure seamless ETL connectivity across the entire stack.

Troubleshooting & Monitoring

Become the go-to expert who fixes broken clusters. You will gain practical skills in diagnosing HDFS failures, YARN resource deadlocks, and common performance bottlenecks using industry-standard monitoring tools.

Who This Program Is For

Database Administrators (DBAs)

BI/ETL Developers

Senior Software Engineers

Data Analysts

IT Architects

Tech Leads

If you work with data at scale and are ready to move into Big Data engineering, this program is engineered to get you certified.

Big Data Hadoop Certification Training Program Roadmap

Why get Big Data Hadoop-certified?

Stop getting filtered out by HR bots

Get the senior-level interviews for Data Architect and Big Data Lead roles your experience already deserves.

Unlock the higher salary bands

Qualify for the salary bands and bonus structures reserved for certified professionals who can manage petabyte-scale infrastructure.

Transition from tactical ETL developer to strategic data platform designer

Move beyond tactical ETL work into strategic data platform design and earn a seat at the table where architecture decisions are made.

Eligibility & Prerequisites

There is no single governing body like PMI for Big Data certifications, but the most respected vendor-neutral and vendor-specific exams (e.g., those from Cloudera and the former Hortonworks and MapR programs) typically require:

Formal Training: Completion of a comprehensive program covering the entire ecosystem (HDFS, YARN, MapReduce, Spark, Hive, etc.). Our 40+ hour training satisfies this requirement.

Deep Technical Experience: Vendor certifications expect candidates to have spent significant time in a production environment. Our curriculum simulates this experience through complex, integrated projects.

Programming Proficiency: Mandatory hands-on experience in a programming language like Python or Scala for writing Spark applications. This is heavily emphasized in our practical lab sessions.

Course Modules & Curriculum

Lesson 1: Deep Dive into MapReduce & Graph Problem Solving

Write and optimize custom partitioners, combiners, and reducers for performance. Tackle complex distributed patterns such as graph traversal and dataset joins.
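To see why combiners and partitioners matter, here is a pure-Python simulation of a word-count job (not Hadoop code). The `combine` step pre-aggregates each map task's output before the shuffle, and `partition` routes every key to exactly one reducer. Hadoop's default HashPartitioner is based on the key's Java `hashCode()`; `zlib.crc32` stands in for it here as an assumption, because Python's built-in string `hash()` changes between runs.

```python
import zlib
from collections import Counter, defaultdict


def mapper(line):
    """Emit (word, 1) for every token, like the classic WordCount mapper."""
    for word in line.lower().split():
        yield word, 1


def combine(pairs):
    """Map-side pre-aggregation; safe here because addition is associative and commutative."""
    totals = Counter()
    for key, value in pairs:
        totals[key] += value
    return list(totals.items())


def partition(key, num_reducers):
    """Deterministic hash partitioner; crc32 stands in for Java's hashCode()."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def run_job(splits, num_reducers=2, use_combiner=True):
    """Simulate map -> (combine) -> shuffle -> reduce; return counts and shuffle size."""
    shuffled = defaultdict(list)  # reducer id -> list of (key, value) records
    records_shuffled = 0
    for split in splits:  # one map task per input split
        pairs = [kv for line in split for kv in mapper(line)]
        if use_combiner:
            pairs = combine(pairs)
        for key, value in pairs:
            shuffled[partition(key, num_reducers)].append((key, value))
            records_shuffled += 1
    results = {}
    for reducer_id in range(num_reducers):  # each reducer sums only its own keys
        sums = defaultdict(int)
        for key, value in shuffled[reducer_id]:
            sums[key] += value
        results.update(sums)
    return results, records_shuffled


splits = [["the cat sat", "the cat ran"], ["the dog sat"]]
print(run_job(splits, use_combiner=False)[1])  # 9 records shuffled
print(run_job(splits, use_combiner=True)[1])   # 7 records shuffled
```

Both runs produce identical counts, but the combiner cuts the shuffle from 9 records to 7 on this tiny input; with heavily repeated keys in real data the saving can be far larger.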

Lesson 2: Detailed Understanding of Pig

Introduction to Pig Latin. Deploying Pig for data analysis and complex data processing. Performing multi-dataset operations and extending Pig with UDFs.

Lesson 3: Detailed Understanding of Hive

Introduction to Hive and its use for relational data analysis. Data management with Hive, including partitioning, bucketing, and basic query execution.
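Partitioning and bucketing decide how Hive lays a table out on storage. This pure-Python sketch models a hypothetical `sales` table partitioned by `dt` and `country` and bucketed into 4 buckets on `customer_id`. Each partition becomes a `key=value` directory, and a filter on partition columns reads only the matching directories (partition pruning). The plain modulo in `bucket_for` is a simplifying assumption; Hive's real bucketing hash depends on the column type and bucketing version.

```python
from collections import defaultdict

NUM_BUCKETS = 4  # hypothetical: CLUSTERED BY (customer_id) INTO 4 BUCKETS


def partition_path(table, row, partition_cols):
    """Each Hive partition is a key=value directory under the table path."""
    return "/".join([table] + [f"{col}={row[col]}" for col in partition_cols])


def bucket_for(customer_id, num_buckets=NUM_BUCKETS):
    """Simplified bucketing: a plain modulo stands in for Hive's real hash."""
    return customer_id % num_buckets


def write_table(table, rows, partition_cols):
    """Group rows by (partition directory, bucket number), as a load would."""
    layout = defaultdict(list)
    for row in rows:
        key = (partition_path(table, row, partition_cols), bucket_for(row["customer_id"]))
        layout[key].append(row)
    return layout


def prune(layout, **filters):
    """Partition pruning: keep only directories whose key=value parts match the filter."""
    wanted = {f"{col}={val}" for col, val in filters.items()}
    return {key: rows for key, rows in layout.items()
            if wanted <= set(key[0].split("/")[1:])}


rows = [
    {"dt": "2024-01-15", "country": "US", "customer_id": 7, "amount": 20.0},
    {"dt": "2024-01-15", "country": "IN", "customer_id": 8, "amount": 5.0},
    {"dt": "2024-01-16", "country": "US", "customer_id": 11, "amount": 12.5},
]
layout = write_table("sales", rows, ["dt", "country"])
# Like WHERE dt = '2024-01-15': only the two matching partitions are read.
print(sorted(prune(layout, dt="2024-01-15")))
```

Partitioning pays off when queries filter on low-cardinality columns such as dates; bucketing on a high-cardinality join key like `customer_id` keeps matching rows in the same numbered bucket across tables.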

Lesson 1: Impala, Data Formats & Optimization

Introduction to Impala for low-latency querying. Choosing the right tool for the job (Hive, Pig, or Impala). Working with optimized data formats like Parquet and Avro.

Lesson 2: Optimization and Extending Hive

Master UDFs, UDAFs, and critical query optimization techniques (e.g., vectorization, execution plans) to cut down query times and resource usage.

Lesson 3: Introduction to HBase Architecture & NoSQL

Understand the evolution from relational models to NoSQL databases within the Big Data ecosystem. Dive deep into HBase architecture, data modeling concepts, and efficient read/write operations for key-value data storage. Learn how HBase powers real-time analytics pipelines and supports scalable, high-throughput data access, a critical capability for organizations building modern big data analytics solutions.
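Row key design sits at the core of HBase data modeling, because rows are stored sorted by key and contiguous keys land in the same region. One common pattern, sketched below in Python with hypothetical names and an assumed 8 salt buckets, prefixes a stable hash-based salt to spread writes across regions and appends a reversed timestamp so that a user's newest events sort first. This builds key bytes only; it does not talk to an HBase cluster.

```python
import zlib

LONG_MAX = 2**63 - 1   # Java's Long.MAX_VALUE, commonly used for reversed timestamps
NUM_SALT_BUCKETS = 8   # hypothetical: e.g. one per pre-split region


def salt_for(user_id: str) -> int:
    """Stable salt derived from the user id, so readers can recompute it."""
    return zlib.crc32(user_id.encode("utf-8")) % NUM_SALT_BUCKETS


def row_key(user_id: str, event_ts_millis: int) -> bytes:
    """Build a salted row key: <salt>|<user_id>|<reversed timestamp>."""
    reversed_ts = LONG_MAX - event_ts_millis  # newer events -> smaller values
    # Zero-padding keeps lexicographic byte order consistent with numeric order.
    return f"{salt_for(user_id):02d}|{user_id}|{reversed_ts:019d}".encode("utf-8")


def user_prefix(user_id: str) -> bytes:
    """Prefix for scanning all of one user's events, newest first."""
    return f"{salt_for(user_id):02d}|{user_id}|".encode("utf-8")


older = row_key("alice", 1_700_000_000_000)
newer = row_key("alice", 1_700_000_500_000)
print(newer < older)                           # True: newest sorts first
print(older.startswith(user_prefix("alice")))  # True
```

The trade-off: reads for one user stay cheap because the salt can be recomputed from `user_id`, but a scan across all users needs one scan per salt bucket.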

Lesson 1: Why Spark? Spark and HDFS Integration

Understand why MapReduce's disk-based processing becomes a performance bottleneck and how in-memory computing with Spark addresses it. Spark components and common Spark algorithms.

Lesson 2: Running Spark and Writing Applications

Setting up and running Spark on a cluster. Writing core Spark applications using RDDs and DataFrames in Python (PySpark) or Scala, plus typed Datasets in Scala.

Lesson 3: Advanced Spark & Stream Processing

Applying Spark for iterative algorithms, graph analysis (GraphX), and Machine Learning (MLlib). Introduction to Spark Streaming for real-time data ingestion.

Lesson 1: Cluster Setup & Configuration

Detailed, multi-node cluster setup on platforms like Amazon EC2. Core configuration of HDFS and YARN for production readiness.

Lesson 2: Hadoop Administration, Monitoring, and Scheduling

Hadoop monitoring and troubleshooting. Understanding ZooKeeper and advanced job scheduling with Oozie for complex, interdependent workflows.
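Oozie workflows are directed acyclic graphs of actions, defined in XML rather than Python. As a language-neutral illustration of how interdependent steps get ordered, this sketch uses Python's standard `graphlib` module (3.9+) on a hypothetical five-action workflow and groups actions into waves whose members could run in parallel, similar in spirit to an Oozie fork/join. It is not Oozie code and does not submit anything to a cluster.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical workflow: each action maps to the set of actions it depends on.
WORKFLOW = {
    "ingest_logs": set(),
    "ingest_orders": set(),
    "clean_logs": {"ingest_logs"},
    "join_sales": {"clean_logs", "ingest_orders"},
    "build_report": {"join_sales"},
}


def execution_waves(workflow):
    """Group actions into waves; actions within one wave have no mutual dependencies."""
    sorter = TopologicalSorter(workflow)
    sorter.prepare()  # raises graphlib.CycleError if dependencies form a loop
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every action whose inputs are complete
        waves.append(ready)
        sorter.done(*ready)  # mark them finished, unlocking their dependents
    return waves


for number, wave in enumerate(execution_waves(WORKFLOW), start=1):
    print(number, wave)
```

For this workflow the waves are `["ingest_logs", "ingest_orders"]`, then `["clean_logs"]`, `["join_sales"]`, and `["build_report"]`: the two ingest actions are independent, while the join must wait for both branches.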

Lesson 3: Testing, Advanced Tools & Integration

Learn how to validate, test, and integrate Big Data applications for enterprise reliability. Explore unit testing with MRUnit for MapReduce jobs, leverage Flume for data ingestion, and manage your ecosystem with Hue. Understand full-stack integration testing across the Hadoop ecosystem and the key responsibilities of a Hadoop Tester in modern Big Data analytics environments.