Apache Spark & Scala Certification Training Program Overview San Antonio, TX

Your current data infrastructure struggles with growing volumes. Batch processes take hours, and management demands real-time insights that a legacy ETL or Python setup cannot deliver. Apache Spark-driven big data roles in San Antonio, TX and other competitive markets require engineers who can design high-performance, fault-tolerant pipelines in Scala. Without demonstrable skills in Spark, Scala, and DataFrame optimization, your resume is likely to be filtered out of high-paying Senior Data Engineer and Machine Learning Engineer searches. This program equips you to process billions of real-time events efficiently.

This isn't a basic Apache Spark tutorial. Our Apache Spark course is designed by experienced Big Data Architects who manage multi-terabyte Spark clusters in the San Antonio, TX fintech and telecom sectors. You'll master core performance concepts such as handling data skew, optimizing joins, managing garbage collection, and choosing between RDDs and DataFrames, drawing on the official Apache Spark documentation and established Spark architecture best practices. Through hands-on labs in the Spark Shell and modern IDEs, you'll tackle real-world big data projects such as collaborative filtering and large-scale SQL queries. The certification track prepares you for common Apache Spark interview questions and positions you to build the sub-second response systems that modern enterprises depend on.

Apache Spark & Scala Certification Course Highlights San Antonio, TX

Deep-Dive into Spark Internals

Mandatory modules on the Spark execution flow, DAGScheduler, TaskScheduler, and Memory Management to ensure you can optimize any job.

Mastery of Advanced Spark Components

Dedicated hands-on training in Spark Streaming, MLlib (Machine Learning), and GraphX for full-spectrum application development.

2000+ Performance-Focused Questions

Our question bank is engineered to test your ability to debug performance issues, select optimal Spark/Scala syntax, and choose the best data structure for the task.

Rigorous Scala Programming Fluency

Achieve the required level of Scala competence to write concise, functional, and enterprise-grade code, maximizing Spark's native efficiency.

End-to-End Optimization Techniques

Learn the most critical optimization skills: caching strategies, serialization choices (Kryo), and data partitioning, which together can cut execution time substantially on poorly tuned jobs.

24x7 Expert Guidance & Support

Get immediate, high-quality help from certified Senior Data Engineers on complex code debugging, performance tuning, and architectural design questions.

Corporate Training

Ready to transform your team?

Get a custom quote for your organization's training needs.

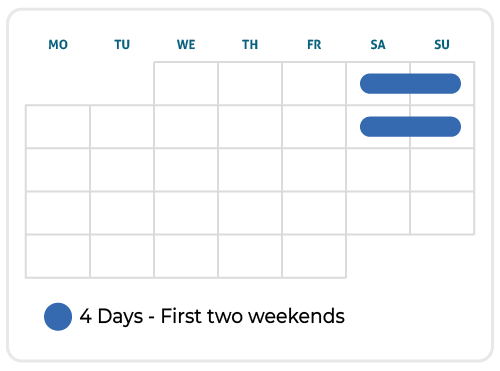

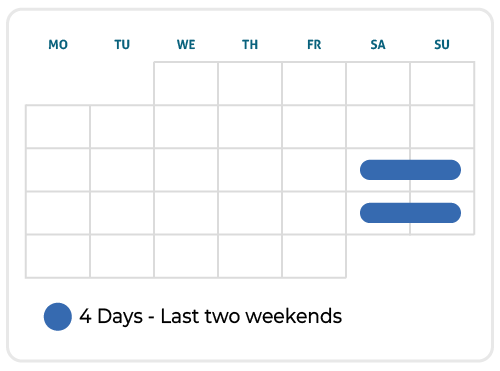

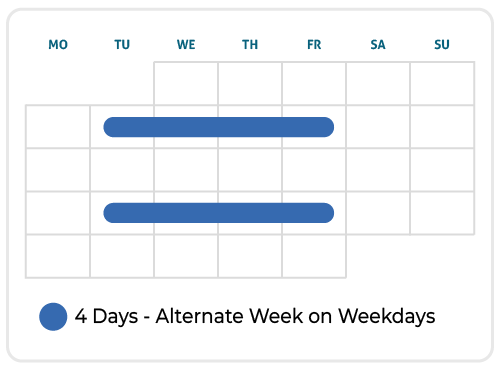

Upcoming Schedule

Skills You Will Gain in Our Apache Spark & Scala Training Program

Spark Core Mastery & RDDs

Learn the foundational Spark architecture, lazy evaluation, and immutability. You will master RDD transformations and actions, understanding when this lower-level API is necessary for complex tasks.

Functional Scala Programming

Achieve proficiency in the Scala language - including case classes, pattern matching, and functional constructs - to write clean, concurrent, and bug-resistant Spark applications.

Spark SQL & DataFrame Optimization

Master the highly efficient DataFrame and Dataset APIs. You will use Spark SQL for structured data, learning to leverage the Catalyst Optimizer for high-speed query execution.

Real-Time Data Streaming

Go live. Implement Spark Streaming and Structured Streaming for continuous data processing, learning techniques for state management and handling event-time windows for accurate, real-time analytics.

Distributed Machine Learning (MLlib)

Deploy scalable ML models. You will use MLlib to implement algorithms like collaborative filtering and classification across massive datasets, turning raw data into predictive assets.

Graph Processing (GraphX)

Tackle complex network analysis. You will use GraphX for use cases such as social network analysis and supply chain optimization, extending your skills to graph-structured relationship data.

Who This Program Is For

Java/Python Developers (3+ years of experience)

ETL/BI Developers

Big Data Engineers (Hadoop)

Data Scientists

Software Architects

Technical Leads

If you build or lead data-intensive projects and meet the prerequisites below, this program is engineered to get you certified.

Apache Spark & Scala Certification Training Program Roadmap San Antonio, TX

Why get Apache Spark & Scala certified?

Stop getting filtered out by HR bots

Get the senior-level, high-performance Data Engineer interviews your experience already deserves.

Unlock the higher salary bands and specialized bonuses

Reserved for engineers who can deliver sub-second latency on massive-scale data.

Transition from maintenance programmer to a high-impact architect

Owning the execution engine that powers enterprise analytics.

Eligibility & Prerequisites

Vendor-neutral Spark certifications are uncommon; the most respected proofs of competence come from vendors such as Databricks or Confluent, or simply from the demonstrable capability this program builds.

Success hinges on:

Mandatory Scala Proficiency: Demonstrable ability to write efficient, clean, and functionally correct Scala code is non-negotiable for writing optimized Spark applications.

Spark Architectural Mastery: Proven deep understanding of the Spark execution model (DAG, memory, partitioning) and the trade-offs between RDDs, DataFrames, and Datasets.

Hands-on Component Deployment: Mandatory experience using Spark SQL for complex queries, Spark Streaming for real-time applications, and MLlib for distributed machine learning.

Course Modules & Curriculum

Lesson 1: Introduction to Spark Architecture

Understand the limitations of MapReduce and the rise of in-memory computing with Apache Spark. Master the Spark cluster components: Driver, Executor, Cluster Manager, and the critical DAGScheduler. This foundational lesson is essential for any Apache Spark certification candidate.
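To make the driver, executor, and DAGScheduler flow concrete, here is a minimal sketch, assuming Spark 3.x with Scala on the classpath and local mode (`local[2]`) standing in for a real cluster manager. The app name and sample words are illustrative. `toDebugString` prints the RDD lineage; the shuffle introduced by `reduceByKey` is where the DAGScheduler splits the job into two stages.

```scala
import org.apache.spark.sql.SparkSession

// Driver program: "local[2]" runs the driver and two executor threads in one JVM,
// standing in for a cluster manager such as YARN or Kubernetes.
val spark = SparkSession.builder()
  .appName("architecture-demo")
  .master("local[2]")
  .getOrCreate()
val sc = spark.sparkContext

// Transformations only record lineage on the driver; no tasks run yet.
val words  = sc.parallelize(Seq("spark", "scala", "spark", "dag"), numSlices = 2)
val counts = words.map(w => (w, 1)).reduceByKey(_ + _)

// The lineage shows a ShuffledRDD: the stage boundary the DAGScheduler will cut at.
println(counts.toDebugString)

// An action submits a job: DAGScheduler -> stages -> TaskScheduler -> executor tasks.
val result = counts.collect().toMap
println(result)
```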

Lesson 2: Introduction to Programming in Scala

Master the functional programming fundamentals of Scala, including immutable variables, functions, closures, and the use of the Scala REPL/IDE for development.
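The fundamentals above fit in a few lines that can be pasted into the Scala REPL. A minimal sketch; the names (`discountPercent`, `discounted`, `makeMultiplier`) are hypothetical illustrations, not course code.

```scala
// Immutable value: reassigning a `val` is a compile-time error.
val discountPercent = 10

// A named function with explicit parameter and return types (integer cents avoid rounding noise).
def discounted(cents: Int): Int = cents * (100 - discountPercent) / 100

// A closure: the returned function captures `factor` from its enclosing scope.
def makeMultiplier(factor: Int): Int => Int = (x: Int) => x * factor
val triple = makeMultiplier(3)

// Functions are values: pass them to `map` over immutable collections.
val prices           = List(1000, 2000, 2500)
val discountedPrices = prices.map(discounted) // `prices` itself is unchanged
val tripled          = List(1, 2, 3).map(triple)

println(discountedPrices)
println(tripled)
```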

Lesson 3: Advanced Scala Functional Programming

Dive deeper into Scala for Spark with case classes, pattern matching, collections, and higher-order functions. Mastering these concepts ensures you can write concise, high-performance distributed code, aligned with best practices from the Apache Spark documentation and advanced Apache Spark tutorials.
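A short illustration of case classes, pattern matching, and higher-order functions working together, assuming plain Scala 2.12 or 2.13 (the versions Spark 3.x is built for). The event model is hypothetical. In the REPL, paste it with `:paste` so the sealed trait and its subclasses compile together.

```scala
// Case classes give immutable records with structural equality and pattern-matching support.
sealed trait Event
case class Click(userId: String, page: String) extends Event
case class Purchase(userId: String, amountCents: Long) extends Event
case object Heartbeat extends Event

// Pattern matching over a sealed hierarchy: the compiler warns if a case is missing.
def describe(e: Event): String = e match {
  case Click(user, page)                      => s"$user viewed $page"
  case Purchase(user, cents) if cents > 10000 => s"$user made a large purchase"
  case Purchase(user, _)                      => s"$user made a purchase"
  case Heartbeat                              => "heartbeat"
}

val events: List[Event] = List(
  Click("u1", "/home"), Purchase("u1", 25000), Purchase("u2", 500), Heartbeat)

// Higher-order functions: `collect` filters and maps in one pass via a partial function.
val revenueByUser: Map[String, Long] = events
  .collect { case Purchase(user, cents) => (user, cents) }
  .groupBy { case (user, _) => user }
  .map { case (user, pairs) => user -> pairs.map(_._2).sum }

events.map(describe).foreach(println)
println(revenueByUser)
```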

Lesson 1: Using RDD for Creating Applications in Spark

Master the core Resilient Distributed Dataset (RDD) API. Understand fault tolerance, partitioning, and caching, the foundation for all Spark computations.
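A minimal sketch of partitioning, lineage-based fault tolerance, and caching on an RDD, assuming Spark 3.x in local mode; the data is synthetic and the partition count is an arbitrary illustrative choice.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.storage.StorageLevel

val spark = SparkSession.builder().appName("rdd-basics").master("local[2]").getOrCreate()
val sc = spark.sparkContext

// Partitioning: the data is split into 4 partitions, each processed by one task.
val numbers = sc.parallelize(1 to 1000, numSlices = 4)

// Fault tolerance: the RDD records its lineage (parent RDD + function), so a lost
// partition can be recomputed from its parent rather than restored from a replica.
val squares = numbers.map(n => n.toLong * n)

// Caching: keep computed partitions in executor memory for reuse across actions.
squares.persist(StorageLevel.MEMORY_ONLY)

val total = squares.sum()   // first action computes and caches the partitions
val count = squares.count() // second action reads the cached partitions

println(s"partitions=${squares.getNumPartitions} total=$total count=$count")
println(squares.toDebugString) // the lineage Spark would use for recomputation
```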

Lesson 2: RDD Transformations and Actions

Hands-on implementation of the core RDD operations, such as map, filter, reduceByKey, and join, and the critical distinction between the narrow and wide dependencies they create.
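This sketch chains narrow and wide transformations and then inspects `rdd.dependencies` to show which steps Spark records as shuffles. It assumes Spark 3.x in local mode; the purchase and city data are hypothetical, and the `depKind` helper is an illustrative name, not a Spark API.

```scala
import org.apache.spark.{NarrowDependency, ShuffleDependency}
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder().appName("rdd-ops").master("local[2]").getOrCreate()
val sc = spark.sparkContext

// Hypothetical sample data: (userId, purchaseAmount) and (userId, city).
val purchases = sc.parallelize(Seq(("u1", 30), ("u2", 5), ("u1", 20), ("u3", 50)))
val cities    = sc.parallelize(Seq(("u1", "San Antonio"), ("u2", "Austin"), ("u3", "Dallas")))

// Narrow transformations: each output partition depends on a single input partition.
val bigPurchases = purchases.filter { case (_, amount) => amount >= 10 }
val doubled      = bigPurchases.mapValues(_ * 2)

// Wide transformations: reduceByKey and join move data between partitions (shuffle).
val totals = doubled.reduceByKey(_ + _)
val joined = totals.join(cities)

// Hypothetical helper: classify the first dependency Spark recorded for an RDD.
def depKind(rdd: RDD[_]): String = rdd.dependencies.head match {
  case _: ShuffleDependency[_, _, _] => "wide (shuffle)"
  case _: NarrowDependency[_]        => "narrow"
  case other                         => other.getClass.getSimpleName
}
println(s"filter: ${depKind(bigPurchases)}, reduceByKey: ${depKind(totals)}")

// Actions trigger execution and return results to the driver.
val result = joined.collect().toMap
println(result)
```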

Lesson 3: Spark Optimization and Performance Tuning (Core)

Learn core optimization techniques: choosing the correct Storage Level, using Kryo serialization for speed, and managing the critical trade-offs between partitioning and memory.
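A hedged sketch of these three levers, assuming Spark 3.x run as a standalone application (in an already-running shell, `spark.serializer` cannot be changed on the existing session). The `Reading` class, partition counts, and storage level are illustrative choices, not recommendations for every workload.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession
import org.apache.spark.storage.StorageLevel

// Hypothetical record type used only for this illustration.
case class Reading(sensorId: Int, value: Double)

// Kryo is usually faster and more compact than Java serialization; registering
// classes avoids writing the full class name alongside every serialized object.
val conf = new SparkConf()
  .setAppName("core-tuning")
  .setMaster("local[2]")
  .set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
  .registerKryoClasses(Array(classOf[Reading]))

val spark = SparkSession.builder().config(conf).getOrCreate()
val sc = spark.sparkContext

val readings = sc.parallelize((1 to 100000).map(i => Reading(i % 100, i * 0.5)), numSlices = 8)

// Serialized storage trades CPU (deserialization) for a smaller memory footprint;
// MEMORY_AND_DISK_SER spills partitions that do not fit instead of recomputing them.
readings.persist(StorageLevel.MEMORY_AND_DISK_SER)

// coalesce merges partitions without a full shuffle; repartition shuffles
// to rebalance data or increase parallelism.
val compacted  = readings.coalesce(4)
val rebalanced = readings.repartition(16)

println(s"serializer=${sc.getConf.get("spark.serializer")}")
println(s"partitions: original=${readings.getNumPartitions}, " +
  s"coalesced=${compacted.getNumPartitions}, repartitioned=${rebalanced.getNumPartitions}")
println(s"count=${readings.count()}")
```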

Lesson 1: Running SQL Queries Using Spark SQL

Master Apache Spark SQL by creating and using DataFrames and Datasets. Understand how Datasets add compile-time type safety, how both APIs benefit from Spark's memory-efficient internal format, and how structured data improves performance in big data projects. This lesson is essential preparation for the Apache Spark certification.
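A minimal sketch contrasting a typed Dataset with an SQL query over the same data, assuming Spark 3.x in local mode; the `Trade` schema and values are hypothetical.

```scala
import org.apache.spark.sql.{Dataset, SparkSession}

// Hypothetical schema for illustration.
case class Trade(symbol: String, qty: Int, price: Double)

val spark = SparkSession.builder().appName("spark-sql").master("local[2]").getOrCreate()
import spark.implicits._

// Dataset[Trade]: field names and types are checked at compile time.
val trades: Dataset[Trade] = Seq(
  Trade("ACME", 100, 10.0), Trade("ACME", 50, 12.0), Trade("INIT", 10, 99.0)).toDS()

// Typed transformation: a typo such as _.qtty would fail to compile.
val largeTrades = trades.filter(_.qty >= 50)

// The same data as an untyped DataFrame (Dataset[Row]) queried through SQL.
trades.toDF().createOrReplaceTempView("trades")
val volume = spark.sql(
  "SELECT symbol, SUM(qty) AS total_qty FROM trades GROUP BY symbol ORDER BY symbol")

largeTrades.show()
volume.show()
```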

Lesson 2: The Catalyst Optimizer and Query Tuning

Deep dive into the Catalyst Optimizer and Tungsten execution engine. Learn how to interpret query plans, debug performance, and select the optimal join strategies.
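To see Catalyst at work, this sketch prints the logical and physical plans and uses the `broadcast` hint to request a broadcast hash join. It assumes Spark 3.x in local mode with hypothetical `orders` and `stores` tables; exact plan text varies between Spark versions.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.broadcast

val spark = SparkSession.builder().appName("catalyst-demo").master("local[2]").getOrCreate()
import spark.implicits._

// Hypothetical tables: a larger fact table and a small dimension table.
val orders = (1 to 10000).map(i => (i, i % 50, i * 1.5)).toDF("order_id", "store_id", "amount")
val stores = (0 until 50).map(i => (i, s"store-$i")).toDF("store_id", "store_name")

// Catalyst can push the filter below the join and prune columns not needed downstream.
val query = orders
  .filter($"amount" > 100)
  .join(broadcast(stores), "store_id") // hint: ship the small table to every executor
  .select("store_name", "amount")

// Prints the parsed, analyzed, and optimized logical plans plus the physical plan.
query.explain(true)

// Physical plan chosen by the planner (before any adaptive re-optimization):
// with the hint, it should contain a BroadcastHashJoin rather than a SortMergeJoin.
val plannedPlan = query.queryExecution.sparkPlan.treeString
println(plannedPlan.contains("BroadcastHashJoin"))
```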

Lesson 3: Advanced DataFrame Operations and Window Functions

Master complex DataFrame manipulations including UDFs (User-Defined Functions) and advanced window functions for rolling aggregations and ranking. This expertise is vital for enterprise reporting and real-world Apache Spark big data applications.
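A sketch of a rolling aggregation, a ranking, and a UDF, assuming Spark 3.x in local mode with hypothetical daily sales data. The `tier` UDF is illustrative; built-in functions are generally preferable when one exists, because Catalyst cannot optimize inside a UDF.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.expressions.Window
import org.apache.spark.sql.functions.{avg, col, rank, udf}

val spark = SparkSession.builder().appName("windows").master("local[2]").getOrCreate()
import spark.implicits._

// Hypothetical daily revenue per region.
val sales = Seq(
  ("south", 1, 100), ("south", 2, 300), ("south", 3, 200),
  ("north", 1, 50), ("north", 2, 80)
).toDF("region", "day", "revenue")

// Rolling 2-day average: the previous row and the current row within each region.
val rolling = Window.partitionBy("region").orderBy("day").rowsBetween(-1, Window.currentRow)

// Rank days by revenue within each region, highest first.
val byRevenue = Window.partitionBy("region").orderBy(col("revenue").desc)

// Hypothetical UDF labelling rows by revenue threshold.
val tier = udf((revenue: Int) => if (revenue >= 200) "high" else "normal")

val report = sales
  .withColumn("rolling_avg", avg("revenue").over(rolling))
  .withColumn("rank", rank().over(byRevenue))
  .withColumn("tier", tier(col("revenue")))
  .orderBy("region", "day")

report.show()
```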

Lesson 1: Spark Streaming and Structured Streaming

Understand the difference between micro-batching and continuous processing. Implement Structured Streaming for fault-tolerant, end-to-end real-time pipelines.
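A minimal Structured Streaming sketch, assuming Spark 3.x in local mode. It uses the built-in `rate` test source, an event-time window with a watermark, and a 5-second micro-batch trigger; the checkpoint path is a hypothetical local directory and the 20-second run time is only for the demo.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, window}
import org.apache.spark.sql.streaming.Trigger

val spark = SparkSession.builder().appName("streaming").master("local[2]").getOrCreate()

// The built-in "rate" source emits (timestamp, value) rows, handy for testing.
val events = spark.readStream
  .format("rate")
  .option("rowsPerSecond", 5)
  .load()

// Event-time windows with a watermark: state for windows that fall more than
// 10 seconds behind the latest observed event time can be dropped.
val counts = events
  .withWatermark("timestamp", "10 seconds")
  .groupBy(window(col("timestamp"), "5 seconds"))
  .count()

// Micro-batch execution every 5 seconds; the checkpoint directory stores
// offsets and aggregation state so the query can recover after a failure.
val query = counts.writeStream
  .outputMode("update")
  .format("console")
  .option("truncate", "false")
  .option("checkpointLocation", "/tmp/checkpoints/rate-demo")
  .trigger(Trigger.ProcessingTime("5 seconds"))
  .start()

query.awaitTermination(20000) // returns after ~20 seconds if the query is still running
query.stop()
```

Swapping in `Trigger.Continuous("1 second")` requests continuous processing instead, but that mode supports only map-like queries (selections and projections), so it would not accept the aggregation used here.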

Lesson 2: Distributed Machine Learning with Spark MLlib

Master the MLlib API. Implement and evaluate core algorithms like Linear Regression, Logistic Regression, and Collaborative Filtering across large-scale datasets.
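A collaborative-filtering sketch with the DataFrame-based `spark.ml` ALS estimator, assuming Spark 3.x in local mode; the ratings and hyperparameters are invented for illustration. Linear and logistic regression follow the same `fit`/`transform` estimator pattern.

```scala
import org.apache.spark.ml.evaluation.RegressionEvaluator
import org.apache.spark.ml.recommendation.ALS
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder().appName("als").master("local[2]").getOrCreate()
import spark.implicits._

// Hypothetical explicit ratings: (user, item, rating).
val ratings = Seq(
  (0, 10, 4.0f), (0, 11, 1.0f), (0, 12, 5.0f),
  (1, 10, 5.0f), (1, 12, 4.0f), (1, 13, 2.0f),
  (2, 11, 2.0f), (2, 12, 5.0f), (2, 13, 1.0f)
).toDF("user", "item", "rating")

// Collaborative filtering via alternating least squares (matrix factorization).
val als = new ALS()
  .setUserCol("user")
  .setItemCol("item")
  .setRatingCol("rating")
  .setRank(4)
  .setMaxIter(10)
  .setRegParam(0.1)
  .setColdStartStrategy("drop") // drop predictions for users/items unseen in training
  .setSeed(42L)

val model = als.fit(ratings)

// Training-set RMSE keeps the sketch short; use a held-out split in practice.
val rmse = new RegressionEvaluator()
  .setMetricName("rmse")
  .setLabelCol("rating")
  .setPredictionCol("prediction")
  .evaluate(model.transform(ratings))

println(f"RMSE = $rmse%.3f")
model.recommendForAllUsers(2).show(false)
```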

Lesson 3: Feature Engineering and ML Pipeline

Learn the essential steps of building a robust ML pipeline: feature selection, scaling, model training, and persistent storage of models for deployment.
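These pipeline steps map directly onto `spark.ml` stages. A minimal sketch, assuming Spark 3.x in local mode; the churn columns, hyperparameters, and model path are hypothetical.

```scala
import org.apache.spark.ml.{Pipeline, PipelineModel}
import org.apache.spark.ml.classification.LogisticRegression
import org.apache.spark.ml.feature.{StandardScaler, VectorAssembler}
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder().appName("ml-pipeline").master("local[2]").getOrCreate()
import spark.implicits._

// Hypothetical churn data: two numeric features and a 0/1 label.
val data = Seq(
  (12.0, 1.0, 0.0), (3.0, 7.0, 1.0), (15.0, 0.0, 0.0),
  (2.0, 9.0, 1.0), (10.0, 2.0, 0.0), (1.0, 8.0, 1.0)
).toDF("tenure_months", "support_calls", "label")

// Feature step: combine the selected columns into a single vector column.
val assembler = new VectorAssembler()
  .setInputCols(Array("tenure_months", "support_calls"))
  .setOutputCol("raw_features")

// Scaling step: center and scale each feature to unit standard deviation.
val scaler = new StandardScaler()
  .setInputCol("raw_features")
  .setOutputCol("features")
  .setWithMean(true)

// Training step: reads "features" and "label" by default.
val lr = new LogisticRegression().setMaxIter(20).setRegParam(0.01)

val pipeline = new Pipeline().setStages(Array(assembler, scaler, lr))
val model = pipeline.fit(data)

// Persistence: save the whole fitted pipeline and reload it for deployment.
val path = "/tmp/models/churn-pipeline"
model.write.overwrite().save(path)
val reloaded = PipelineModel.load(path)

reloaded.transform(data).select("tenure_months", "support_calls", "label", "prediction").show()
```

Saving the fitted `PipelineModel` rather than only the classifier keeps the feature assembly and scaling parameters together with the model, so serving code applies exactly the same transformations.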

Lesson 1: Spark GraphX Programming

Master the GraphX API in Apache Spark for advanced graph analysis. Implement algorithms like PageRank and community detection for applications in social networks, telecom, and other big data projects. This is a key skill for Apache Spark certification and a frequent topic in Apache Spark interview questions.
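A GraphX sketch, assuming Spark 3.x in local mode and a hypothetical five-user follower graph. It runs PageRank and uses label propagation as one simple community-detection method; connected components are computed as a deterministic reference grouping.

```scala
import org.apache.spark.graphx.{Edge, Graph}
import org.apache.spark.graphx.lib.LabelPropagation
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder().appName("graphx").master("local[2]").getOrCreate()
val sc = spark.sparkContext

// Hypothetical social graph: vertices (id, name) and directed "follows" edges.
val users = sc.parallelize(Seq((1L, "ana"), (2L, "ben"), (3L, "cai"), (4L, "dev"), (5L, "eli")))
val follows = sc.parallelize(Seq(
  Edge(1L, 2L, 1), Edge(2L, 1L, 1), Edge(3L, 1L, 1), Edge(3L, 2L, 1),
  Edge(4L, 5L, 1), Edge(5L, 4L, 1)))

val graph = Graph(users, follows)

// PageRank, iterated until scores change by less than the tolerance.
val ranks = graph.pageRank(tol = 0.0001).vertices

// Community detection via label propagation (fast but approximate).
val communities = LabelPropagation.run(graph, maxSteps = 5).vertices

// Join scores back to names and print them, highest first.
users.join(ranks).map { case (_, (name, rank)) => (name, rank) }
  .collect().sortBy(-_._2).foreach { case (name, rank) => println(f"$name%-4s $rank%.3f") }

// Connected components: each vertex is labelled with the lowest vertex id in its component.
val components = graph.connectedComponents().vertices.collectAsMap()
println(components)
```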

Lesson 2: Ecosystem Integration and Deployment

Connect Spark with external systems: Kafka for ingestion, HDFS/S3 for storage, and Hive/Impala for querying. Master deployment on YARN or Kubernetes.
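A hedged ingestion-to-storage sketch, assuming Spark 3.x with the `spark-sql-kafka-0-10` connector and `hadoop-aws` (for `s3a://` paths) on the classpath. The `KAFKA_BROKERS` variable, the topic, and the bucket names are hypothetical placeholders. The master URL is left to `spark-submit` (for example `--master yarn`, or a `k8s://` URL for Kubernetes), with a local fallback for experimentation. Hive tables would be queried through a session built with `enableHiveSupport()`, which is omitted here.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.col
import org.apache.spark.sql.streaming.StreamingQuery

// spark-submit supplies the master (YARN or k8s://...); fall back to local mode otherwise.
val builder = SparkSession.builder().appName("kafka-to-s3")
if (!sys.props.contains("spark.master")) builder.master("local[2]")
val spark = builder.getOrCreate()

// Ingestion: subscribe to a Kafka topic as a streaming DataFrame.
// Kafka delivers key and value as binary, so they are cast to strings before parsing.
def buildIngestion(session: SparkSession, brokers: String, topic: String): DataFrame =
  session.readStream
    .format("kafka")
    .option("kafka.bootstrap.servers", brokers)
    .option("subscribe", topic)
    .option("startingOffsets", "latest")
    .load()
    .select(col("key").cast("string"), col("value").cast("string"), col("timestamp"))

// Storage: append Parquet files to S3 (an hdfs:// path works the same way); the
// checkpoint records progress so a restarted query resumes where it left off.
def startArchive(events: DataFrame, outPath: String, checkpointPath: String): StreamingQuery =
  events.writeStream
    .format("parquet")
    .option("path", outPath)
    .option("checkpointLocation", checkpointPath)
    .start()

// Wire the pipeline only when a broker list is configured (hypothetical env var).
sys.env.get("KAFKA_BROKERS").foreach { brokers =>
  val query = startArchive(buildIngestion(spark, brokers, "events"),
    "s3a://example-bucket/events/", "s3a://example-bucket/checkpoints/events/")
  query.awaitTermination()
}
```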

Lesson 3: Production Tuning and Debugging

Master production-level skills including cluster sizing, monitoring with Prometheus, memory and garbage collection management, and interpreting Spark UI metrics. These advanced capabilities are essential for running Spark in production and for Apache Spark certification candidates.
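Cluster sizing is often done with back-of-the-envelope arithmetic before tuning against Spark UI metrics. This sketch encodes one common rule of thumb (an assumption, not an official Spark formula) and prints matching `--conf` settings. `spark.ui.prometheus.enabled` is an experimental Spark 3.x option that exposes executor metrics in Prometheus format through the driver UI; `ExecutorPlan` and `planExecutors` are hypothetical names.

```scala
// Rule-of-thumb sizing (heuristic): reserve 1 core and 1 GB per node for the OS and
// daemons, cap cores per executor (around 5 is a frequent starting point), and leave
// room for off-heap overhead, which Spark sizes by default as max(384 MiB, 10% of heap).
case class ExecutorPlan(executorsPerNode: Int, coresPerExecutor: Int, heapGb: Int, overheadMb: Int)

def planExecutors(coresPerNode: Int, memGbPerNode: Int, coresPerExecutor: Int = 5,
                  overheadFactor: Double = 0.10): ExecutorPlan = {
  val usableCores = coresPerNode - 1
  val usableMemGb = memGbPerNode - 1
  val executorsPerNode = usableCores / coresPerExecutor
  require(executorsPerNode > 0, "node too small for the requested cores per executor")
  val containerGb = usableMemGb.toDouble / executorsPerNode
  // Heap plus overhead must fit in the container: heap * (1 + factor) <= container.
  val heapGb = math.floor(containerGb / (1 + overheadFactor)).toInt
  val overheadMb = math.max(384, math.ceil(heapGb * 1024 * overheadFactor).toInt)
  ExecutorPlan(executorsPerNode, coresPerExecutor, heapGb, overheadMb)
}

val plan = planExecutors(coresPerNode = 16, memGbPerNode = 64)

// Settings a job might pass to spark-submit via --conf, derived from the plan.
val tuningConf = Map(
  "spark.executor.cores"            -> plan.coresPerExecutor.toString,
  "spark.executor.memory"           -> s"${plan.heapGb}g",
  "spark.executor.memoryOverhead"   -> s"${plan.overheadMb}m",
  "spark.executor.extraJavaOptions" -> "-XX:+UseG1GC", // explicit G1 (default on Java 9+)
  "spark.ui.prometheus.enabled"     -> "true"          // experimental in Spark 3.x
)

println(plan)
tuningConf.foreach { case (k, v) => println(s"--conf $k=$v") }
```

For a 16-core, 64 GB node this yields three 5-core executors with a 19 GB heap each; treat those numbers as a starting point and adjust using GC time and spill metrics in the Spark UI.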