Top Tableau Q and A for Freshers and Experts in 2025

This Tableau interview questions and answers blog collects the most common interview questions. They were drawn from interviews with professionals who work on data analysis and chart or graph design. If you would like to cover the basics of Tableau first (which I recommend before reading this list of interview questions for beginners and experts), work through the Tableau Tutorial blog.

1. What are Traditional BI Tools, and how do they differ from Tableau?

The following is a general comparison of how Traditional Business Intelligence (BI) Tools differ from Tableau:

| Traditional BI Tools | Tableau |
| --- | --- |
| Rely heavily on hardware and are therefore less adaptable. | No hardware dependency, so it is more flexible and scalable. |
| Built from a combination of several different technologies. | Employs quick and dynamic associative search technology. |
| Usually do not support multi-core processing or in-memory computing. | Supports in-memory computing when combined with modern technologies. |
| Provide a fixed view of the data, limiting the scope of analysis. | Supports predictive analysis to enable more intelligent business decisions. |

2. What is Tableau?

Tableau is a business intelligence (BI) and data visualization tool that lets you explore and present your data quickly and clearly. It:

• Connects to many kinds of data sources.

• Transforms raw data into shareable, interactive visual reports.

• Supports decision-making with simple dashboards and clear data stories.

3. What are the Various Tableau Products and the Latest Version?

Tableau offers several products: Tableau Desktop, Tableau Server, Tableau Online, and Tableau Public. Below is a brief overview of each of them:

1. Tableau Desktop

Tableau Desktop is a program that lets you create charts, graphs, and dashboards without writing code. You can connect directly to your data sources, analyze live data, and create interactive reports. It lets you bring data from many different sources together in one place.

2. Tableau Server

Tableau Server is for organizations. Once you build dashboards in Tableau Desktop, you can publish and share them securely with colleagues through Tableau Server. It supports live data connections and helps teams make faster decisions.

3. Tableau Online

Tableau Online (renamed Tableau Cloud in 2022) is the cloud-hosted offering of Tableau Server. There is nothing to install because Tableau hosts it. Simply publish your dashboards from Tableau Desktop and share them with others online, anywhere.

4. Tableau Public

Tableau Public is free. You can publish and share visualizations, but your work is hosted online and available to everyone. It is suitable for learning or publishing public data, but not private business data.

4. What are the Various Types of Data in Tableau?

Tableau supports several data types. Each data type tells Tableau how to display and handle the data. The primary data types are:

1. Text (String)

Used for words, names, or any combination of numbers and letters (e.g., "New York", "Product A").

2. Whole and Decimal Numbers

• Integer (Whole Number) – For counting (e.g., 100, -5)

• Decimal (Floating Point) – For numbers with decimals (e.g., 10.5, 3.14)

3. Date and Date & Time

Used for calendar values:

• Date – e.g., 2025-06-30

• Date & Time – e.g., 2025-06-30 14:30:00

4. Boolean

A value of true or false. Used in logical fields and filters.

5. Geographic

Used to plot data on maps. Includes fields such as:

• Country

• Region/Area

• City

• Zip code

• Latitude/Longitude

6. Cluster Group (Generated)

Created when Tableau groups similar data points. You do not enter these values manually; Tableau generates them whenever it performs cluster or group analysis.

5. What Are Measures and Dimensions in Tableau?

In Tableau, data is separated into Measures and Dimensions:

Dimensions

• They are descriptive fields, such as names, dates, or categories.

• They define "what" the data is describing (e.g., Product Name, Country, or Category).

• Dimensions allow you to slice and group data in your charts.

• For example, a Product dimension can have Product Name, Type, and Color.

Measures

• These are numeric fields that can be aggregated, for example summed, averaged, or counted.

• Measures are used to perform calculations and analysis.

• Example: Sales, Quantity Sold, and Profit are measures.

Quick Example:

In a Sales dataset:

• Dimensions = Customer Name, Region, Product Category

• Measures = Total Sales, Units Sold, Profit Margin
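The split between dimensions and measures can be illustrated outside Tableau. The following is a minimal Python sketch, not Tableau code: the rows, field names, and the `aggregate` helper are made up for illustration. A dimension decides how rows are grouped, and a measure is the number that gets summed within each group.

```python
from collections import defaultdict

# Hypothetical sales rows: "region" and "category" act as dimensions,
# "sales" and "units" act as measures.
rows = [
    {"region": "East", "category": "Furniture", "sales": 200.0, "units": 2},
    {"region": "East", "category": "Technology", "sales": 500.0, "units": 1},
    {"region": "West", "category": "Furniture", "sales": 300.0, "units": 3},
]


def aggregate(rows, dimension, measure):
    """Sum a measure for each distinct value of a dimension."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[dimension]] += row[measure]
    return dict(totals)


sales_by_region = aggregate(rows, "region", "sales")
units_by_category = aggregate(rows, "category", "units")
print(sales_by_region)
print(units_by_category)
```

Swapping the dimension changes how the same measure is broken down, which is roughly what happens when you drag a different field onto a shelf.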

6. What is the difference between .twb and .twbx files in Tableau?

| File Type | Description |
| --- | --- |
| .twb (Tableau Workbook) | An XML file that contains your visualizations, layouts, and settings but not the data. |
| .twbx (Tableau Packaged Workbook) | A zipped file containing the .twb file along with any data sources, images, or custom components. It is the best option for sharing. |

Tip: Use .twbx when you send your work to someone else, because it keeps everything together.
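Because a packaged workbook is a zip archive, the idea can be sketched with Python's standard `zipfile` module. This is only an illustration of the packaging concept: the file names, the tiny XML string, and the CSV content are hypothetical, and a real workbook contains far more than this.

```python
import io
import zipfile

# Hypothetical workbook XML and data file contents, for illustration only.
twb_xml = "<workbook><worksheets/></workbook>"
csv_data = "region,sales\nEast,700\nWest,300\n"

# Build an in-memory zip that bundles the workbook with its data,
# which is the idea behind a packaged workbook.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
    archive.writestr("sales.twb", twb_xml)
    archive.writestr("Data/sales.csv", csv_data)

# Read the archive back: the workbook definition and the data travel together.
with zipfile.ZipFile(buffer) as archive:
    names = archive.namelist()
    workbook_text = archive.read("sales.twb").decode("utf-8")

print(names)
```

A plain .twb corresponds to only the XML part, so anyone opening it still needs access to the original data.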

7. What different join methods can you use in Tableau?

Similar to SQL, Tableau has four primary join types for combining data from two tables:

| Join Type | Explanation |
| --- | --- |
| Inner Join | Returns only the rows that match in both tables. |
| Left Join | Returns all rows from the left table and the matching rows from the right table. |
| Right Join | Returns all rows from the right table and the matching rows from the left table. |
| Full Outer Join | Returns all rows from both tables, whether or not they have a match in the other table. |

These joins help you combine datasets to produce more informed visuals and findings.
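The four join types can be compared side by side with a small Python sketch. This is an illustration of join semantics only, not how Tableau implements joins: the `join` helper, the table contents, and the field names are assumptions. Where Tableau would show nulls for unmatched rows, this sketch simply leaves the missing fields out.

```python
def join(left, right, key, how="inner"):
    """Join two lists of dicts on `key`; `how` is inner, left, right, or full."""
    right_by_key = {}
    for row in right:
        right_by_key.setdefault(row[key], []).append(row)
    left_keys = {row[key] for row in left}

    result = []
    for lrow in left:
        matches = right_by_key.get(lrow[key], [])
        if matches:
            # Matching rows appear in every join type.
            result.extend({**lrow, **rrow} for rrow in matches)
        elif how in ("left", "full"):
            # Unmatched left row is kept; right-side fields stay absent.
            result.append(dict(lrow))
    if how in ("right", "full"):
        # Unmatched right rows are kept; left-side fields stay absent.
        result.extend(dict(r) for r in right if r[key] not in left_keys)
    return result


# Hypothetical tables: customer 4 has an order but no customer record,
# and customer 3 has a record but no order.
orders = [
    {"customer_id": 1, "amount": 50},
    {"customer_id": 2, "amount": 75},
    {"customer_id": 4, "amount": 20},
]
customers = [
    {"customer_id": 1, "name": "Ana"},
    {"customer_id": 2, "name": "Ben"},
    {"customer_id": 3, "name": "Cy"},
]

row_counts = {how: len(join(orders, customers, "customer_id", how))
              for how in ("inner", "left", "right", "full")}
print(row_counts)
```

With this data the inner join keeps two rows, the left and right joins keep three each, and the full outer join keeps four.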

8. How Many Tables Can I Join in Tableau?

In Tableau, you can join a maximum of 32 tables within a single data source. This helps you build rich datasets by combining several tables, much like joins in SQL.

9. What are the Various Types of Data Connections in Tableau?

Tableau connects you to your data in two primary ways:

1. Live Connection

• Points directly to the data source.

• Queries the database in real time.

• Displays the latest data automatically.

• Helpful when dealing with frequently changing data.

2. Extract Connection

• Creates a snapshot of your data.

• Stored in Tableau's high-performance, in-memory data engine.

• Refreshed manually or on a schedule.

• Works offline, which makes it ideal for performance and portability.

Which to Use

• Use Live when you want updates immediately.

• Use Extract when performance is critical or if you're operating offline.
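The trade-off between the two connection types can be sketched with Python's built-in `sqlite3` module standing in for the source database. This is a conceptual illustration, not Tableau's mechanism: the table, the helper functions, and the values are hypothetical. The live-style function queries the source on every call, while the extract-style function works from a snapshot that only changes when it is refreshed.

```python
import sqlite3

# An in-memory SQLite database stands in for the source system.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE sales (region TEXT, amount REAL)")
source.execute("INSERT INTO sales VALUES ('East', 100.0)")


def live_total(conn):
    """Live-style access: query the source every time a value is needed."""
    return conn.execute("SELECT SUM(amount) FROM sales").fetchone()[0]


def take_extract(conn):
    """Extract-style access: copy the rows into a local snapshot."""
    return conn.execute("SELECT region, amount FROM sales").fetchall()


def extract_total(snapshot):
    """Compute the total from the snapshot without touching the source."""
    return sum(amount for _, amount in snapshot)


snapshot = take_extract(source)

# The source changes after the extract was taken.
source.execute("INSERT INTO sales VALUES ('West', 50.0)")

live_after = live_total(source)          # reflects the new row
extract_after = extract_total(snapshot)  # still the old snapshot

snapshot = take_extract(source)          # refresh the extract
extract_refreshed = extract_total(snapshot)
print(live_after, extract_after, extract_refreshed)
```

The snapshot keeps answering even if the source is unreachable, at the cost of showing stale numbers until the next refresh.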

10. What Are Shelves in Tableau?

Shelves are designated areas in Tableau where you drag data fields to build your visuals.

Common Shelves:

• Columns Shelf – Places data fields along the top (horizontal axis).

• Rows Shelf – Places data fields along the side (vertical axis).

• Filters Shelf – Controls which data is included in the view.

• Pages Shelf – Splits a view into multiple pages.

• Marks Card – Determines the way marks appear (colour, size, label, etc.).

Note: Certain shelves appear only if you select specific mark types (such as bar, line, or circle).

How to obtain Tableau certification?

We are an Education Technology company providing certification training courses to accelerate careers of working professionals worldwide. We impart training through instructor-led classroom workshops, instructor-led live virtual training sessions, and self-paced e-learning courses.

We have successfully conducted training sessions in 108 countries across the globe and enabled thousands of working professionals to enhance the scope of their careers.

Our enterprise training portfolio includes in-demand and globally recognized certification training courses in Project Management, Quality Management, Business Analysis, IT Service Management, Agile and Scrum, Cyber Security, Data Science, and Emerging Technologies. Download our Enterprise Training Catalog from https://www.icertglobal.com/corporate-training-for-enterprises.php and https://www.icertglobal.com/index.php

Popular Courses include:

- Project Management: PMP, CAPM, PMI-RMP

- Quality Management: Six Sigma Black Belt, Lean Six Sigma Green Belt, Lean Management, Minitab, CMMI

- Business Analysis: CBAP, CCBA, ECBA

- Agile Training: PMI-ACP, CSM, CSPO

- Scrum Training: CSM

- DevOps

- Program Management: PgMP

- Cloud Technology: Exin Cloud Computing

- Citrix Client Administration: Citrix Cloud Administration

The 10 top-paying certifications to target in 2025 are:

Conclusion

Tableau is an excellent tool for presenting data graphically. It has handy features such as dashboards, data connections, and interactive reports. Understanding key concepts such as dimensions, measures, sets, and joins helps you produce useful insights and prepares you for both Tableau interviews and real-world use.

Contact Us For More Information:

Visit: www.icertglobal.com Email:

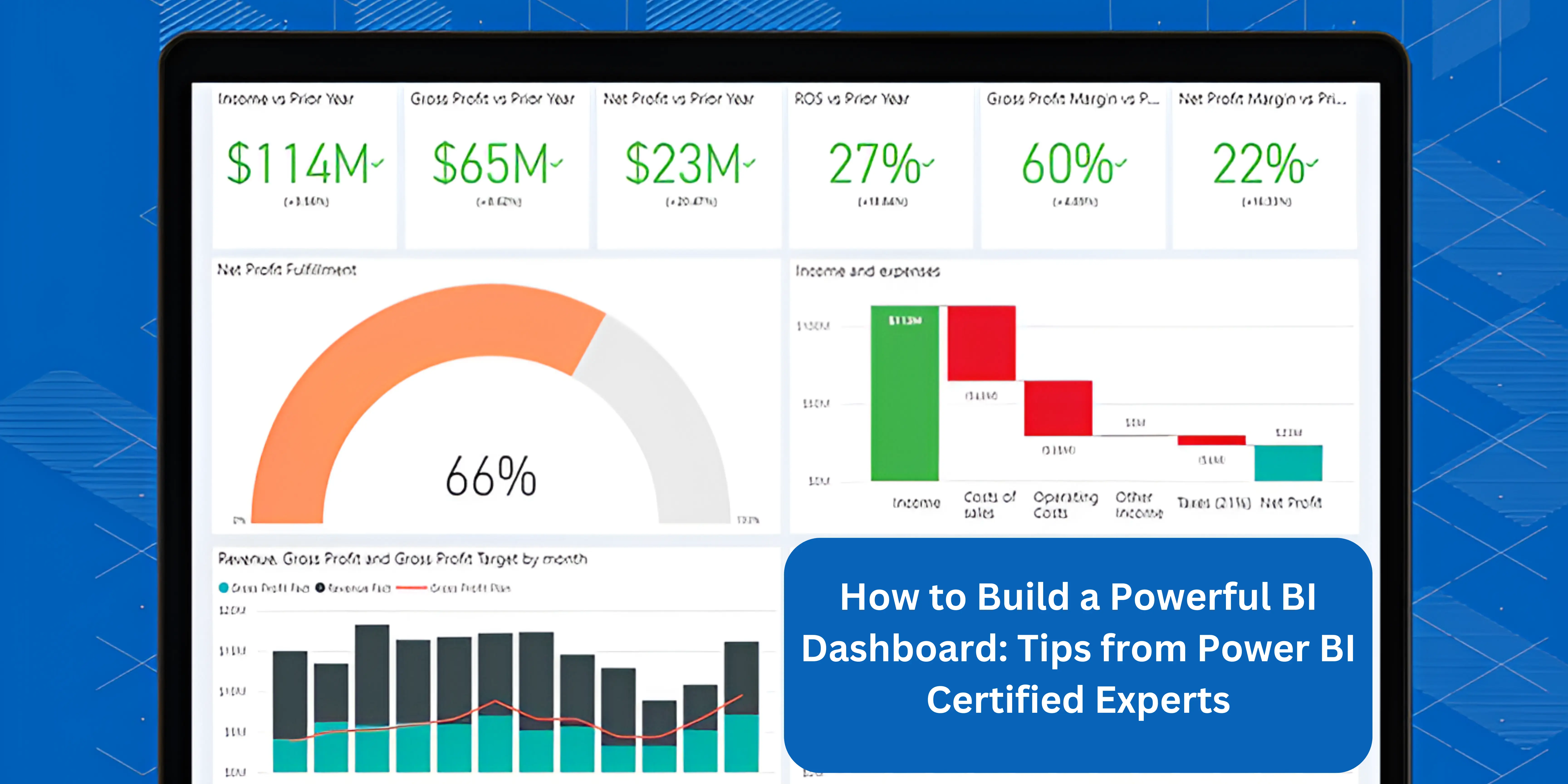

Power BI Interview Q and A Guide for 2025

Power BI is a widely used tool for data analysis and visual reporting. It helps people turn complex data into understandable, actionable insights in the form of dashboards and reports. As more businesses rely on data to make decisions, demand for Power BI experts is rising.

Basic Power BI Interview Questions

1. What is Power BI?

Power BI is a tool created by Microsoft that helps you work with data. You can bring in data from a large number of sources, clean it up, and build charts, graphs, and dashboards. You can use the visuals to uncover insights and make informed decisions. Access Power BI on your computer (Desktop), online via the cloud (Service), or through your smartphone (Mobile app).

2. What are the key components of Power BI?

Power BI includes several tools that make it easy to work with data. These are the primary components:

• Power Query: Retrieves data from various sources such as Excel, databases, or text files. You can also clean and shape the data to make it more usable.

• Power View: Lets you create charts, tables, and graphs. It presents your data clearly, and you can filter to emphasize certain parts of the data.

• Power BI Desktop: A desktop tool that combines Power Query, Power View, and other features. It helps you build reports and dashboards to better understand your data.

• Power BI Mobile: A mobile app available for Android, iOS, and Windows devices. It lets you browse and share your reports and dashboards wherever you are.

• Power Map: A data visualization tool that displays your data on a 3D map. You can see where the data comes from, using locations like cities, states, or countries.

• Power Q&A: Lets you ask questions in plain English and receive answers instantly. The answers are returned as graphs or charts, which are easier to understand.

3. What are the advantages of Power BI?

Power BI has numerous advantages:

• It can process large volumes of data.

• You can create visuals, graphs, and charts from data using built-in templates.

• It includes intelligent features that improve with frequent updates.

• You can create your own custom dashboards.

• You can write calculations and queries in a formula language called DAX.

4. What are the key differences between Power BI and Tableau?

| Feature | Power BI | Tableau |
| --- | --- | --- |
| Ease of Use | Simpler to learn, particularly if you're used to Excel. Drag-and-drop functionality. | Slightly harder to master, but gives more control over appearance. |
| Pricing | Lower. Power BI Desktop is free; Pro is ~$10/month. | Higher. Tableau Creator is ~$70/month. |
| Integration | Connects smoothly with Microsoft applications like Excel, Teams, and SharePoint. | Integrates with most tools, but not as tightly with Microsoft products. |
| Visuals | Good visuals with interactive options; custom visuals are also available. | Better for intricate, custom, and high-end visuals. |
| Performance | Good with small and medium-sized data. | Able to process large data sets if configured properly. |
| Deployment | Cloud-first, but you can host on your own servers too. | Can be hosted in the cloud, on your own servers, or online. |
| Community | Backed by an active Microsoft community and frequent feature updates. | Also has a long-standing and active user base. |

5. What is Power Query?

Power Query is a data preparation tool that helps you:

• Collect data from different sources.

• Clean and transform the data.

• Combine information from various places.

• Load the data into Power BI for visualization.
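The collect, clean, combine, and load steps listed above can be sketched in plain Python. This is an analogy, not Power Query itself (which uses its own editor and language): the two CSV strings, the column names, and the helper functions are made up for illustration.

```python
import csv
import io

# Two hypothetical sources, given as CSV text so the sketch is self-contained.
store_csv = "region,amount\n East ,100\nWest,\n"
web_csv = "region,amount\nNorth,40\n"


def read_rows(text):
    """Collect: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def clean(rows):
    """Clean: trim whitespace, drop rows with no amount, convert types."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip rows where the amount is missing
        cleaned.append({"region": row["region"].strip(), "amount": float(amount)})
    return cleaned


# Combine: append the cleaned rows from both sources into one table,
# ready to be loaded into a report.
combined = clean(read_rows(store_csv)) + clean(read_rows(web_csv))
print(combined)
```

The row for West is dropped because it has no amount, and the stray spaces around East are trimmed before the two sources are appended.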

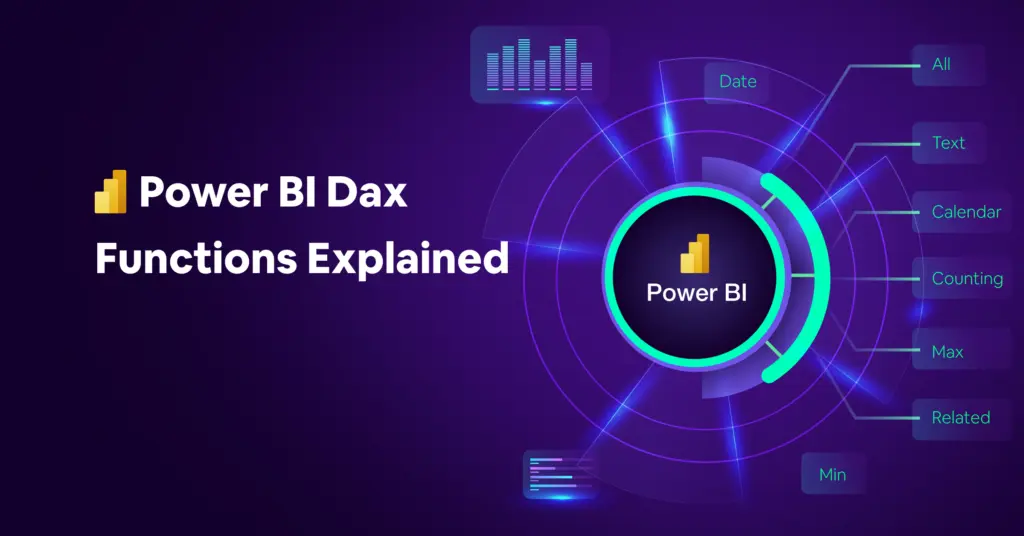

6. What is DAX?

DAX stands for Data Analysis Expressions. It is the formula language used in Power BI, and it is also used in Excel Power Pivot and Analysis Services tabular models. DAX enables you to:

• Perform complex calculations.

• Create your own calculated tables and columns.

• Build custom report logic using math and logical expressions.

7. What are the views in Power BI Desktop?

The main views in Power BI Desktop include:

• Report View: Create reports and charts with visuals.

• Table View (previously called Data View): Inspect your actual data in rows and columns, and sort or filter it.

• Model View: Define relationships between tables and organize your data model.

8. In what way is a calculated column different from a measure?

• Calculated Column: Adds a new column to your table. It is calculated for each row when the data loads or refreshes, and the result is stored in the model.

• Measure: A calculation that is evaluated only when needed, based on the filters applied in a chart or graph. It is well suited to aggregations such as sums or averages over large tables.
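The difference in when each calculation runs can be illustrated with a short Python analogy. This is not DAX: the rows, the `line_total` field, and the `total_sales` function are hypothetical. The column value is computed once per row and stored, while the measure-like function is computed on demand for whichever rows the current filter selects.

```python
# Hypothetical order lines.
rows = [
    {"region": "East", "price": 10.0, "quantity": 3},
    {"region": "East", "price": 20.0, "quantity": 1},
    {"region": "West", "price": 5.0, "quantity": 4},
]

# Calculated-column analogue: evaluated once per row and stored with the row.
for row in rows:
    row["line_total"] = row["price"] * row["quantity"]


def total_sales(rows, region=None):
    """Measure analogue: evaluated on demand over the currently filtered rows."""
    selected = [r for r in rows if region is None or r["region"] == region]
    return sum(r["line_total"] for r in selected)


overall = total_sales(rows)
east_only = total_sales(rows, region="East")
west_only = total_sales(rows, region="West")
print(overall, east_only, west_only)
```

The stored column takes up space for every row, whereas the measure produces a different answer each time the filter changes without storing anything.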

9. What is a Power BI dashboard?

A dashboard is a single page that holds charts and graphics pinned from one or more reports. It gives a snapshot of key numbers (KPIs). Dashboards are created in the Power BI Service (web version), not in Power BI Desktop.

10. What are the filter types in Power BI?

• Visual-level filter: Applies to a single chart or graph.

• Page-level filter: Applies to all the visuals on one page.

• Report-level filter: Applies to every page in the report.

• Slicer: An on-canvas visual that users click to narrow down the data.

• Drillthrough filter: Takes you to a different page with more detail on the selected item.

• Cross-filter/cross-highlight: Selecting a data point in one visual filters or highlights related data in the other visuals.
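The report, page, and visual filter scopes stack on top of each other, which a small Python sketch can make concrete. This is a simplified model under stated assumptions, not Power BI's engine: each filter is represented as a hypothetical `(field, allowed_values)` pair, and a row is shown in a visual only if it passes the filters from all three scopes.

```python
# Hypothetical data behind a report.
rows = [
    {"year": 2025, "region": "East", "category": "Furniture", "sales": 100},
    {"year": 2025, "region": "East", "category": "Technology", "sales": 200},
    {"year": 2025, "region": "West", "category": "Furniture", "sales": 300},
    {"year": 2024, "region": "East", "category": "Furniture", "sales": 400},
]


def visible_rows(rows, report_filters, page_filters, visual_filters):
    """Return the rows that pass every filter from all three scopes."""
    conditions = report_filters + page_filters + visual_filters
    return [
        row for row in rows
        if all(row[field] in allowed for field, allowed in conditions)
    ]


report_filters = [("year", {2025})]            # applies to every page
page_filters = [("region", {"East"})]          # applies to one page
visual_filters = [("category", {"Furniture"})] # applies to one visual

page_level = visible_rows(rows, report_filters, page_filters, [])
visual_level = visible_rows(rows, report_filters, page_filters, visual_filters)
print(len(page_level), len(visual_level))
```

Every visual on the page starts from the two rows left by the report and page filters, and the one visual with its own filter narrows that down to a single row.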

11. What are the various methods of connecting in Power BI?

• Import: Data is copied and stored in Power BI.

• DirectQuery: Data stays in the source and is queried each time a visual needs it.

• Live Connection: Connects to an existing model such as Analysis Services (SSAS); the data is not stored in Power BI.

• Composite Model: Combines Import and DirectQuery in the same model.

12. Does Power BI accommodate Excel files?

Yes. You can connect Power BI to Excel files and bring in tables, named ranges, and even data models.

13. Can Power BI display data in real-time?

Yes. Power BI can present live updates through tools such as Azure Stream Analytics, streaming data, or REST APIs.

14. What is the Power BI Service?

It is Power BI in the cloud. You can use it to browse, share, and collaborate on reports and dashboards through a web browser.

15. Why is Power BI Desktop used?

Power BI Desktop is a free desktop application. You can use it to build reports and data models. Developers and analysts use it to create their work before publishing it to the Power BI Service.

Conclusion

Power BI is a powerful tool for turning data into insights through reports and dashboards. Preparing for interviews by studying its key concepts, tools, and features can help you secure a job in the data sector. With growing demand and good salaries, Power BI is a great career choice.

Contact Us For More Information:

Visit :www.icertglobal.com Email :

Read More

Power BI is a common tool for data analysis and creating visual reports. It assists individuals in transforming intricate data into understandable and actionable insights in the form of dashboards and reports. As more businesses are reliant on data for making effective decisions, demand for Power BI experts is rising.

Basic Power BI Interview Questions

1. What is Power BI?

Power BI is a tool created by Microsoft that helps you work with data. You can bring in data from a large number of sources, clean it up, and build charts, graphs, and dashboards. You can use the visuals to uncover insights and make informed decisions. Access Power BI on your computer (Desktop), online via the cloud (Service), or through your smartphone (Mobile app).

2. What are the key components of Power BI?

Power BI has numerous tools by which you can work with data at ease. These are the primary components:

• Power Query: It assists you in retrieving data from various sources such as Excel, databases, or text files. You can also clean and shape the data to make it more usable.

• Power View: This enables you to create charts, tables, and graphs. It presents your data in a clear and fun manner, and you can filter to emphasize certain sections of the data.

• Power BI Desktop: It is a desktop tool where you can combine Power Query, Power View, and other features. It assists you in making reports and dashboards to better understand your data.

• Power BI Mobile: This is a mobile app available for Android, iOS, and Windows devices. It enables you to browse and share your reports and dashboards wherever you are.

• Power Map: This data visualization tool displays your data on a 3D map. You can see where the data is coming from, using locations like cities, states, or countries.

• Power Q&A: This feature lets you ask questions in simple English and receive answers instantly. It also gives you your replies in graphs or chart form, which is easier to understand.

3. What are the advantages of Power BI?

Power BI has numerous advantages:

• It is able to process a lot of data.

• You can create images, graphs, and charts from data using pre-formatted templates.

• It incorporates intelligent technology that gets better with frequent updates.

• You can even create your own dashboards to read and use.

• You can ask questions or do math in a unique language known as DAX.

4. What are the key differences between Power BI and Tableau?

Feature Power BI abd Tableau

Ease of Use

- Simpler to learn, particularly if you're used to Excel. Drag-and-drop functionality.

- Slightly harder to master, but gives more control over appearance.

Pricing

- Lower. Power BI Desktop is free; Pro is ~$10/month.

- Higher. Tableau Creator is ~$70/month.

- Power BI connects smoothly with Microsoft applications like Excel, Teams, and SharePoint. It integrates with most tools, but not as well with Microsoft.

Visuals

- Good visuals with interaction possibilities; there are also custom visuals.

- Better for intricate, custom, and high-end visuals.

- Performance is good with small and medium-sized data. It is able to process large data sets if configured properly.

Deployment

- Cloud-first, but you can host on your own servers too.

- Can be hosted on the cloud, on your servers, or online.

- Backed by an active Microsoft community and frequent feature updates. Also has a long-standing and active user base.

5. What is Power Query?

Power Query is something that assists you:

• Collect data from different sources

• Sanitize and update the data

• Integrate information from various places.

• Import the data into Power BI to visualize.

6. What is DAX?

DAX stands for Data Analysis Expressions. It is a formula language that is proprietary to Power BI. DAX enables you to:

• Learn complex math.

• Create your own tables and columns

• Formulate special reports using math and logic.

7. How is the attitude of people towards Power BI Desktop?

Power BI Desktop has four main views:

• Report View: Create reports and charts with graphics.

• Table View: View your data in a table (rows and columns) to sort, filter, or edit.

• Data View: Look at your real data carefully.

• Model View: Join different tables, calculate fields, and organize your data model.

8. In what way is a calculated column different from a measure?

• Calculated Column: This adds a new column to your table. It is calculated for each row when the data loads.

• Measure: A calculation only made when required, usually presented in charts or graphs. It is faster for large amounts or averages.

9. What is a Power BI dashboard?

A dashboard is a single page holding charts and graphics of multiple reports. It is a snapshot of key numbers (KPIs) and can be viewed only in Power BI Service (web version).

10. What are the filter types in Power BI?

• Visual-level filter: Changes a single chart or graph.

• Page-level filter: Modifies all the charts on one page.

• Report-level filter: Modifies every page within the report.

• Slicer: A graphical element that people can click to narrow down data.

• Drillthrough filter: Enables you to proceed to a different page with more details.

• Cross filter/highlight: Highlights data on clicking on visuals

11. What are the various methods of connecting in Power BI?

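• Import: Data is copied and stored in Power BI, and is updated when the dataset refreshes.

• DirectQuery: Data stays in the source and is queried each time a report needs it.

• Live Connection: Power BI connects to an existing model, such as Analysis Services (SSAS), without caching the data.

• Composite Model: A combination of Import and DirectQuery in the same model.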
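To make the contrast between Import and DirectQuery concrete, here is a rough Python analogy using an in-memory SQLite database as the "source". It does not use any Power BI API; the table, the data, and the function names `total_imported` and `total_direct` are assumptions made up for this sketch.

```python
import sqlite3

# Hypothetical source system holding the sales data.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE sales (region TEXT, amount REAL)")
source.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("North", 100.0), ("South", 250.0)],
)
source.commit()

# Import-style: copy the rows once and work from the local copy.
imported = source.execute("SELECT region, amount FROM sales").fetchall()


def total_imported():
    """Answer from the stored copy; unchanged until the copy is refreshed."""
    return sum(amount for _, amount in imported)


def total_direct():
    """DirectQuery-style: send the question to the source every time."""
    row = source.execute("SELECT COALESCE(SUM(amount), 0) FROM sales").fetchone()
    return row[0]


# A new sale arrives in the source after the import was taken.
source.execute("INSERT INTO sales VALUES ('East', 50.0)")
source.commit()

print(total_imported())  # still reflects the data as of the import
print(total_direct())    # reflects the new row immediately
```

The imported copy answers quickly but goes stale until it is refreshed, while the direct query is always current but depends on the source for every answer.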

12. Does Power BI accommodate Excel files?

Yes. You can connect Power BI to Excel files and bring in tables, named ranges, and even data models.

13. Can Power BI display data in real-time?

Yes. Power BI can show live updates using streaming data, fed by sources such as Azure Stream Analytics or the REST APIs.

14. What is the Power BI Service?

It is Power BI in the cloud. You can use it to browse, share, and collaborate on reports and dashboards through a web browser.

15. Why is Power BI Desktop used?

Power BI Desktop is a free desktop application for building data models and reports. Developers and analysts use it to create their work before publishing it to the Power BI Service.

How to obtain Power BI certification?

We are an Education Technology company providing certification training courses to accelerate careers of working professionals worldwide. We impart training through instructor-led classroom workshops, instructor-led live virtual training sessions, and self-paced e-learning courses.

We have successfully conducted training sessions in 108 countries across the globe and enabled thousands of working professionals to enhance the scope of their careers.

Our enterprise training portfolio includes in-demand and globally recognized certification training courses in Project Management, Quality Management, Business Analysis, IT Service Management, Agile and Scrum, Cyber Security, Data Science, and Emerging Technologies. Download our Enterprise Training Catalog from https://www.icertglobal.com/corporate-training-for-enterprises.php and https://www.icertglobal.com/index.php

Popular Courses include:

- Project Management: PMP, CAPM, PMI-RMP

- Quality Management: Six Sigma Black Belt, Lean Six Sigma Green Belt, Lean Management, Minitab, CMMI

- Business Analysis: CBAP, CCBA, ECBA

- Agile Training: PMI-ACP, CSM, CSPO

- Scrum Training: CSM

- DevOps

- Program Management: PgMP

- Cloud Technology: Exin Cloud Computing

- Citrix Client Administration: Citrix Cloud Administration

The 10 top-paying certifications to target in 2025 are:

Conclusion

Power BI is a powerful tool for turning data into insights through reports and dashboards. Preparing for interviews by studying its key concepts, tools, and features can help you secure a job in the data sector. With growing demand and good salaries, Power BI is a great career choice.

Contact Us For More Information:

Visit :www.icertglobal.com Email :

Important Soft Skills for Data Scientists

Data science is not only mathematics, statistics, or programming. It also needs imagination, creativity, and vision. These skills enable data scientists to make difficult business decisions based on large amounts of data.

Breaking into data science can be a struggle. People who want to be data scientists need more than math and programming skills; they also need good communication skills to excel in their careers.

Why Data Scientists Require Soft Skills?

Google conducted research to find out what makes the best teams at work. It found that the best teams were not made up of the most brilliant technical specialists. Rather, they were teams of people who could communicate, empathize with others, and lead with empathy.

Top Soft Skills for Data Scientists

When people use the term data scientist, they automatically assume that it's a person who's excellent in math, programming, and computer use. These are useful skills, but there is another aspect to being an excellent data scientist that is equally relevant — soft skills. Soft skills are the social and personal skills that enable data scientists to collaborate effectively with others, think logically, and make reasonable judgments when solving problems. Let's discuss some of the most critical soft skills that every data scientist must be excellent at.

1. Communication

One of the key responsibilities of a data scientist is to make sense of data and communicate what they discover to different kinds of people. At times they need to explain their findings to other data scientists and IT professionals who are technically inclined. But in many cases they need to explain them to people who are not highly data-literate, like business managers or clients.

2. Curiosity

Curiosity is the desire to learn and discover. It means being a relentless detective who keeps asking questions. A curious data scientist will not settle for the first answer. They will probe deeper to uncover hidden surprises or trends in the data. This is crucial because the best discoveries are sometimes made by looking beyond what is apparent.

3. Understanding Business

Data science is not just about numbers; it is also about making a business successful. Because of this, data scientists need to know how businesses run: what the business sells, who its customers are, and what it wants to accomplish.

4. Storytelling

Numbers alone rarely speak for themselves. We don't necessarily understand everything from looking at numbers or graphs. That is where storytelling comes in. Storytelling means placing data within a relatable story that tells us what is going on and why we should care.

Good storytelling gets people to care about what the numbers are telling them. Instead of just reporting "sales increased 10%," for example, a data scientist could say that sales increased because a new ad campaign paid off, or because a new product was a hit. This gets managers and teams to know what to do next.

5. Adaptability

Technology and business are constantly evolving. Every day, new tools, techniques, and problems crop up. Because of this, data scientists must be adaptable. Being adaptable means being willing to learn something new and change the way you do things when needed.

6. Critical Thinking

Critical thinking means reasoning carefully before reaching a decision. Data scientists get a lot of data, but not all of it is accurate or helpful. They must examine the data carefully to find mistakes or gaps.

7. Product Understanding

Most data scientists are assigned to projects for specific products or services. Knowing the product helps them create better solutions. For instance, if a data scientist works at a company that makes video games, knowing how users enjoy the game or which features they use most helps the scientist examine the right data.

8. Team Player

Data scientists rarely work alone. They usually collaborate with other data scientists, engineers, managers, and business professionals. Being a team player means that you work well with others, share ideas, listen to ideas, and help the team work well.

How to Enhance Soft Skills for a Data Scientist Position

Among the ways of building your soft skills are:

• Learn online: Acquire new skills like project management or negotiation.

• Request feedback: Discover what you excel at and where you can improve by asking your coworkers or friends.

• Find a coach: A coach can help you get better at the skills you want to improve more quickly.

• Practice in front of a friend: Practice asking for a raise or promotion, and have a friend play the role of your boss and provide feedback.

The Real Benefits of Soft Skills

Soft skills can be learned. One factory found that training its workers in soft skills made them much more efficient and substantially increased its profit. Soft skills enable individuals to work more efficiently, make sound judgments, and get more work done.

In the future, machines will do even more of the repetitive work, and humans will do more valuable work such as engaging with customers, making better products, and running machines. Soft skills will be of tremendous value in such jobs.

Conclusion

Soft skills are as essential as technical skills for data scientists. They enable one to work well with others and make well-informed decisions while solving problems. Growth in these skills can result in a successful and fulfilling career.

Contact Us For More Information:

Visit : www.icertglobal.com Email : info@icertglobal.com


Discover the Benefits of Descriptive Analytics

Most businesses today use data to make more informed decisions. By examining figures and data, business executives can observe patterns and trends that show them which direction things are heading. This enables them to work better and post improved results.

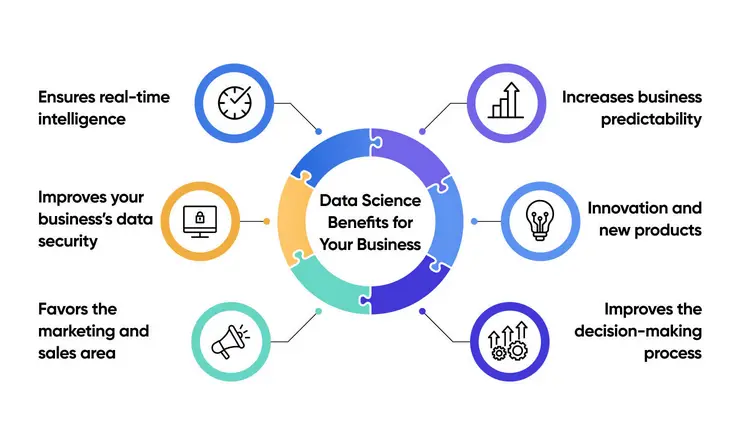

What is descriptive analytics?

There are four primary categories of business analytics:

• Descriptive Analytics – What occurred?

• Diagnostic Analytics – Why did it happen?

• Predictive Analytics – What's next?

• Prescriptive Analytics – What do we do about it?

Descriptive analytics is the most prevalent and simplest form. It analyzes historical and current data to show what has occurred in a firm. It helps companies spot trends and track their performance.

With descriptive analytics, companies create reports and scorecards (e.g., KPIs – Key Performance Indicators) to quantify things such as customer behavior, spending, and sales. They use them to see how they are performing.

A prime example is social media. Companies use descriptive analytics to analyze how their posts are performing. They track likes, shares, new followers, and how much money they are generating on social media. This allows them to determine which posts or campaigns are working and which are not.

More Examples

Descriptive analytics also helps us understand:

• How much profit or loss the company is making

• Inventory and product movement

• What customers complain about in surveys

• How fast things get through the supply chain

• Which bills must be paid or collected

Descriptive analytics can seem daunting, but it is only a means of understanding what has already happened. This helps businesses make better decisions in the future.

What Can Descriptive Analytics Teach Us?

Descriptive analytics helps companies see where they have been, where they currently are, and where they stand relative to others. What exactly can they learn from it?

1. Current Performance

It tracks significant numbers for departments, teams, and the business as a whole. For instance, it could show one salesperson's performance this quarter or which product is selling best.

2. Trends from the Past

It gathers data over time so that companies can compare periods. For instance, they can compare sales this quarter with sales last quarter to check whether things are getting better. Graphs are often used to make such comparisons easy to understand.
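A quarter-over-quarter comparison like the one described comes down to a percentage change. Here is a minimal Python sketch; the sales figures and the `percent_change` helper are hypothetical.

```python
def percent_change(previous, current):
    """Return the change from previous to current as a percentage."""
    if previous == 0:
        raise ValueError("previous period must be non-zero")
    return (current - previous) / previous * 100


# Hypothetical sales totals for two consecutive quarters.
quarterly_sales = {"Q1": 120_000, "Q2": 138_000}

change = percent_change(quarterly_sales["Q1"], quarterly_sales["Q2"])
print(f"Q2 vs Q1: {change:+.1f}%")
```

A positive result means sales grew compared with the previous quarter, and a negative one means they fell.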

3. Strengths and Weaknesses

It enables companies to know what is going right and what is going wrong. They can compare their performance with other companies or industry standards. This informs them where they are performing better and where they should improve.

How Does Descriptive Analytics Work?

There are five crucial steps:

Step 1: Determine What to Monitor

Firms first decide what they would like to measure. If they want to grow, they may look at how much they earn every quarter. The billing department may track how quickly customers make payments.

Step 2: Search for the Appropriate Data

Second, they have to gather the right data. This can be difficult since the data may be spread across various places. Some businesses use specialized software such as ERP systems to help keep everything in order. They may also need data from websites or social media.

Step 3: Prepare the Data

They have to clean and merge the data before it can be used. This involves correcting errors and putting everything in the right format. Sometimes they use data modeling to organize everything into an easier-to-understand structure.

Step 4: Analyze the Data

After the data is prepared, they load it into a tool such as Excel or a specialized analytics program. They run simple calculations such as totals and averages to figure out what is going on. A manager might need to know how much money the company earned this month, for instance.

Step 5: Display the Results

Finally, they present the results so others can understand. Some people like charts or graphs, while others prefer tables and numbers. The goal is to make the data clear and useful for everyone.
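The five steps can be walked through in a short Python sketch. Everything here is made up for illustration: the metric (monthly revenue), the two sample sources `erp_rows` and `web_rows`, and the `prepare` helper.

```python
from collections import defaultdict
from statistics import mean

# Step 1: decide what to monitor. Here, the assumed metric is monthly revenue.

# Step 2: gather data from different places (hypothetical ERP and web exports).
erp_rows = [
    ("2025-01", "North", "1200.50"),
    ("2025-01", "South", "980"),
    ("2025-02", "North", "1340"),
]
web_rows = [
    ("2025-02", "South", "n/a"),      # a bad value that must be cleaned out
    ("2025-02", "South", "1105.25"),
]


# Step 3: prepare the data by converting amounts and dropping unusable rows.
def prepare(rows):
    prepared = []
    for month, region, amount in rows:
        try:
            prepared.append((month, region, float(amount)))
        except ValueError:
            continue  # skip rows whose amount is not a number
    return prepared


records = prepare(erp_rows) + prepare(web_rows)

# Step 4: analyze with simple totals and an average.
revenue_by_month = defaultdict(float)
for month, _region, amount in records:
    revenue_by_month[month] += amount
average_monthly = mean(revenue_by_month.values())

# Step 5: display the results as a small table.
for month in sorted(revenue_by_month):
    print(f"{month}  {revenue_by_month[month]:>10.2f}")
print(f"average  {average_monthly:>10.2f}")
```

In a real project the last step would usually be a chart or dashboard rather than printed text, but the flow from raw rows to a readable summary is the same.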

What Are the Advantages of Descriptive Analytics?

Below are some of the benefits of using descriptive analytics:

- It is easy to use and get the hang of. You don't need to be a genius at math to work with it.

- There are several handy tools that do the hard work for you.

- It answers common questions. For example, "How are we doing?" or "What should we change?"

But it is not flawless. It has two limitations:

- It is basic. It only looks at simple relationships between a few things.

- It does not tell us why. It tells us what happened, but not why it happened or what may be coming next.

Descriptive, Predictive, and Prescriptive Analytics: What's the Difference?

Three types of analytics are compared most often in business and data analysis: descriptive, predictive, and prescriptive. Each serves a unique purpose and answers a different question.

1. Descriptive Analytics – What happened?

Descriptive analytics examines historical and current facts in order to describe what has occurred within a business. It helps people see trends, patterns, and what is happening at the moment.

Example:

A shop looks at six months' worth of sales to see what sold the most.

Important points:

• Uses reports, charts, and dashboards

• Indicates previous performance

• Supports monitoring progress and finding trends

2. Predictive Analytics – What could happen next?

Predictive analytics uses data and mathematics to estimate what is likely to happen in the future. It examines past trends and uses them to predict future events or outcomes.

Example:

An online retailer forecasts which shoppers are most likely to purchase something during a holiday sale based on their history.

Important points:

• Uses statistics and machine learning

• Helps plan ahead

• Identifies risks and opportunities

3. Prescriptive Analytics – What do we do about it?

Prescriptive analytics takes it a step further. It does not just tell you what will happen; it tells you what you should do next. It helps people make the best decision by weighing several possible scenarios.

Example:

A delivery business uses data to determine the cheapest and most efficient route for its drivers, factoring in traffic and fuel costs.

Important points:

• Recommends the best options

• Supports decision-making

• Solves complicated problems with numerous options

In summary:

• Descriptive: Tells you what occurred in the past.

• Predictive: Looks forward to estimate what will happen.

• Prescriptive: Tells you what to do next.
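The three types can be placed side by side in a small Python sketch. The sales numbers, the cost figures, and the `cost` function are hypothetical, and the forecast is a deliberately naive trend extension standing in for the statistical or machine learning models a real predictive system would use.

```python
# Hypothetical units sold per month, oldest first.
monthly_sales = [100, 110, 120, 130]

# Descriptive: summarize what already happened.
total = sum(monthly_sales)
average = total / len(monthly_sales)

# Predictive: a naive forecast that extends the average month-to-month change.
steps = [later - earlier for earlier, later in zip(monthly_sales, monthly_sales[1:])]
forecast = monthly_sales[-1] + sum(steps) / len(steps)


# Prescriptive: pick the action with the lowest expected cost given the forecast.
def cost(order_qty, demand, holding=1.0, shortage=3.0):
    """Assumed cost model: pay for unsold stock and, more heavily, for lost sales."""
    surplus = max(order_qty - demand, 0)
    missing = max(demand - order_qty, 0)
    return surplus * holding + missing * shortage


options = [120, 140, 160]  # candidate order quantities for next month
best = min(options, key=lambda qty: cost(qty, forecast))

print(f"Descriptive: total={total}, average={average}")
print(f"Predictive: next month is about {forecast}")
print(f"Prescriptive: order {best} units")
```

Each stage builds on the one before it: the summary describes the past, the forecast extends it, and the recommendation turns the forecast into an action.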

Conclusion

Descriptive, predictive, and prescriptive analytics help businesses observe the past, predict the future, and make smart choices. All of them have a unique role to play in data-driven success. iCert Global offers training to help you master these skills and excel in your professional life.

Contact Us For More Information:

Visit : www.icertglobal.com Email : info@icertglobal.com

Read More

Most businesses today utilize data to enable them to make more informed decisions. By examining figures and data, business executives can observe patterns and trends that inform them in which direction things are heading. This enables them to do things better and post improved results. When individuals are provided with more data, they are able to make more informed decisions.

What is descriptive analytics?

There are four primary categories of business analytics:

• Descriptive Analytics – What occurred?

• Diagnostic Analytics – Why did it happen?

• Predictive Analytics – What's next?

• Prescriptive Analytics – What do we do about it?

Descriptive analytics is the most prevalent and simplest form. It analyzes historical and existing data to demonstrate what has occurred in a firm. It assists companies in spotting tendencies and tracking their performance.

With descriptive analytics, companies create reports and scorecards (e.g., KPIs – Key Performance Indicators) to quantify such as customer behavior, spending, and sales. They use them to view how they are performing.

A prime example is social media. Companies utilize descriptive analytics to analyze how their posts are performing. They are tracking likes, shares, new followers, and how much money they are generating on social media. This allows them to determine which posts or campaigns are performing and which are not.

More Examples

Descriptive analytics also help us to:

• How much profit or loss the company is making

• Inventory and product movement

• What customers complain about in surveys

• How fast things get through the supply chain

• Which bills must be paid or collected

Descriptive analytics can seem daunting, but it is only a means of comprehending something that already exists. This assists businesses in making better decisions in the future.

What Can Descriptive Analytics Teach Us?

Descriptive analytics assists companies in looking where they are headed, where they currently are, and where they are relative to others. There is more to it than that, however. Then what else can they discover from it?

1. How well the company is currently doing.

It tracks significant numbers for departments, teams, and the business as a whole. For instance, it could be showing one salesperson's performance this quarter or what product is selling best.

2. Trends from the Past

It gathers data over time so that companies can compare things. For instance, they can compare sales this quarter with sales last quarter to check whether things are getting better. They tend to use graphs to facilitate such comparisons to be easily understandable.

3. Strengths and Weaknesses

It enables companies to know what is going right and what is going wrong. They can compare their performance with other companies or industry standards. This informs them where they are performing better and where they should improve.

How Does Descriptive Analytics Work

There are five crucial steps:

Step 1: Determine What to Monitor

Firms first decide what they would like to measure. If they want to expand, they may consider how much they earn every quarter. The unit that bills may observe how rapidly customers make payments.

Step 2: Search for the Appropriate Data

Secondly, they have to gather the correct data. This might be difficult since the data may be located in various places. Some businesses use specialized software such as ERP systems to assist in keeping everything in order. They may also require data from websites or social media.

Step 3: Prepare the Data

They have to clean and merge the data before it can be used. This involves correcting errors and having everything in the right format. Occasionally, they employ something known as data modeling to assist in getting everything in an easier-to-understand format.

Step 4: Analyze the Data

After the data is prepared, they feed it into a computer program using applications such as Excel or using special programs. They will make simple calculations such as totals, averages, or other simple math to figure out what is going on. A manager might need to know how much money they earned this month, for instance.

Step 5: Display the Results

Finally, they present the results so others can understand. Some people like charts or graphs, while others prefer tables and numbers. The goal is to make the data clear and useful for everyone.

What are the advantages of Descriptive Analytics

Below are some of the benefits of using descriptive analytics:

- It is easy to use and get the hang of. You don't need to be a genius at math to work it.

- There are several handy tools. Several tools do the hard work for you.

- It answers common questions. For example, "How are we doing?" or "What should we change?"

- But it is not flawless. There are two errors here:

- It is basic. It only looks at simple relationships between a few things.

- It does not inform us why. It informs us what, but not why or what may be coming next.

Descriptive, Predictive, and Prescriptive Analytics:

What's the Difference?

There are three primary types of analytics used in business and data analysis that assist one in comprehending data and making conclusions. They include Descriptive, Predictive, and Prescriptive analytics. Each serves a unique purpose and provides a different answer.

1. Descriptive Analytics – What happened?

Descriptive analytics examines historical and current facts with the purpose of describing what has occurred within a business. It makes individuals consider trends, patterns, and what is happening at the moment.

Example:

- A shop has looked at 6 months' worth of sales to see what was selling most.

Important points:

•Utilizes reports, charts, and dashboards.

• Indicates previous performance

• Supports monitoring progress and finding trends

2. Predictive Analytics – What could happen next?

Predictive analytics uses data and mathematics to predict what will happen in the future. It examines past trends and makes future occurrences or outcomes predictions from them.

Example:

An online retailer forecasts which shoppers are most likely to purchase something during a holiday sale based on their history.

Important points:

• Uses statistics and machine learning

• Helps plan ahead

• Identifies risks and opportunities
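One of the simplest ways to turn past trends into a forecast is to fit a straight line through historical values and extend it one step. The sketch below does that with ordinary least squares in plain Python. The monthly figures and the `fit_line` helper are assumptions made for illustration; real predictive models usually rely on statistics or machine learning libraries and far more data.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical units sold in months 1 to 6.
months = [1, 2, 3, 4, 5, 6]
units = [100, 110, 120, 130, 140, 150]

slope, intercept = fit_line(months, units)
forecast_month_7 = slope * 7 + intercept
print(f"Trend: {slope:.1f} extra units per month")
print(f"Forecast for month 7: {forecast_month_7:.1f} units")
```

The forecast is only as good as the assumption that the past trend continues, which is why predictive analytics talks about what could happen rather than what will happen.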

3. Prescriptive Analytics – What do we do about it?

Prescriptive analytics goes a step further. It tells you not just what will happen but also what you should do next. It helps people make the best decision across several possible scenarios.

Example:

A delivery business uses data to determine the cheapest and most efficient route for its drivers, factoring in traffic and fuel costs.

Main points:

• Recommends the best options

• Supports decision-making

• Solves complicated problems with numerous options
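The delivery example can be sketched as a tiny decision rule: score each candidate route by its cost and recommend the cheapest one. The routes, the cost rates, and the `route_cost` and `recommend` helpers are all hypothetical; production route planning uses dedicated optimization methods and live traffic data.

```python
# Hypothetical candidate routes: distance in km and expected traffic delay in minutes.
routes = {
    "highway": {"distance_km": 30.0, "delay_min": 20.0},
    "city": {"distance_km": 22.0, "delay_min": 45.0},
    "ring": {"distance_km": 35.0, "delay_min": 5.0},
}

FUEL_COST_PER_KM = 0.5   # assumed fuel cost per kilometre
DELAY_COST_PER_MIN = 0.4  # assumed cost of each minute lost in traffic


def route_cost(route):
    """Combine fuel cost and traffic delay cost into a single number."""
    fuel = route["distance_km"] * FUEL_COST_PER_KM
    delay = route["delay_min"] * DELAY_COST_PER_MIN
    return fuel + delay


def recommend(candidates):
    """Return the name of the route with the lowest combined cost."""
    return min(candidates, key=lambda name: route_cost(candidates[name]))


best = recommend(routes)
print(f"Recommended route: {best} (cost {route_cost(routes[best]):.2f})")
```

The output is an action ("take this route"), not just a description or a forecast, which is the defining trait of prescriptive analytics.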

In conclusion:

• Descriptive analytics tells you what happened in the past.

• Predictive analytics looks ahead to estimate what will happen.

• Prescriptive analytics tells you what to do next.

How to obtain certification?

We are an Education Technology company providing certification training courses to accelerate careers of working professionals worldwide. We impart training through instructor-led classroom workshops, instructor-led live virtual training sessions, and self-paced e-learning courses.

We have successfully conducted training sessions in 108 countries across the globe and enabled thousands of working professionals to enhance the scope of their careers.

Our enterprise training portfolio includes in-demand and globally recognized certification training courses in Project Management, Quality Management, Business Analysis, IT Service Management, Agile and Scrum, Cyber Security, Data Science, and Emerging Technologies. Download our Enterprise Training Catalog from https://www.icertglobal.com/corporate-training-for-enterprises.php and https://www.icertglobal.com/index.php

Popular Courses include:

- Project Management: PMP, CAPM, PMI-RMP

- Quality Management: Six Sigma Black Belt, Lean Six Sigma Green Belt, Lean Management, Minitab, CMMI

- Business Analysis: CBAP, CCBA, ECBA

- Agile Training: PMI-ACP, CSM, CSPO

- Scrum Training: CSM

- DevOps

- Program Management: PgMP

- Cloud Technology: EXIN Cloud Computing

- Citrix Client Administration: Citrix Cloud Administration


Conclusion

Descriptive, predictive, and prescriptive analytics help businesses observe the past, predict the future, and make smart choices. All of them have a unique role to play in data-driven success. iCert Global offers training to help you master these skills and excel in your professional life.

Contact Us For More Information:

Visit : www.icertglobal.com Email : info@icertglobal.com

Best Languages for Data Science Beginners and Pros

If you aspire to be a data scientist, you need to learn some programming languages. No single language can do everything, so it helps to learn more than one. Knowing the right languages makes you more efficient at solving data science problems, and these skills are in frequent demand. Python, for instance, became extremely popular during the 2010s as data science grew. An Indeed study covering 2014 to 2019 identified data science and Python skills as key to kick-starting a tech career in 2020.

Things to Keep in Mind Before Selecting a Programming Language:

• What kind of work will you do with data?

• How does your organization leverage data science?

• What does your business want to accomplish?

• What are your own career aspirations?

• Which programming languages do you already know?

• How hard are you willing to work to learn more?

Most Used Programming Languages for Data Science

Python

Python is one of the most important languages for data science, and that is likely to hold true for at least the next five years. If you understand Python and know how to solve problems with numbers and experiments, you will be well prepared for this profession.

Python is a flexible, general-purpose programming language. People use it in many ways, such as:

• Using tools like NumPy and SciPy to work with numbers and data

• Building websites with Django and Flask

• Organizing and managing information

• Making smart programs using machine learning, like decision trees
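To give a feel for the last point, here is a minimal sketch of the idea behind a decision tree: a single split, sometimes called a decision stump, learned from labeled examples. It is written in plain Python for illustration and is not how a library such as scikit-learn implements trees. The data set and the `best_threshold` and `predict` helpers are hypothetical.

```python
def best_threshold(values, labels):
    """Find the split 'value <= t' with the fewest mistakes.

    Values at or below the threshold are predicted as 0, the rest as 1.
    """
    best_t, best_errors = None, len(values) + 1
    for t in sorted(set(values)):
        errors = sum(
            (v <= t and y != 0) or (v > t and y != 1)
            for v, y in zip(values, labels)
        )
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t, best_errors


def predict(value, threshold):
    """Apply the learned split to a new value."""
    return 0 if value <= threshold else 1


# Hypothetical data: hours studied and whether the student passed (1) or not (0).
hours_studied = [1, 2, 3, 4, 6, 7, 8, 9]
passed = [0, 0, 0, 0, 1, 1, 1, 1]

threshold, errors = best_threshold(hours_studied, passed)
print(f"Learned rule: pass if hours studied > {threshold} ({errors} training errors)")
print(f"Prediction for 5 hours: {predict(5, threshold)}")
```

A full decision tree repeats this kind of split on each resulting group, usually with a purity measure rather than a raw error count.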

R Programming

R is another powerful language for data science. It became popular very quickly.

R is great at working with numbers and making graphs. It also has over 8,000 packages contributed by people around the world. Statisticians use R to build predictive models and create graphs.

For machine learning and related work, R offers packages such as gmodels, RODBC, tm, and class. R is also a good option if you write research reports.

Java

Java is a widely used programming language that is around 30 years old. It is used to develop desktop, web, and mobile applications. Java runs on the Java Virtual Machine (JVM), which lets the same program run on many kinds of computers.

Many big companies prefer Java because it is easy to add features to a project without slowing it down. That is why it is commonly used in large machine learning frameworks.

JavaScript

JavaScript is a popular programming language. It was originally used to make websites interactive and engaging. Over time it grew more capable through tools such as ReactJS, AngularJS, and NodeJS. Now you can use JavaScript to build both the front end (the user interface) and the back end (the underlying functionality) of a website.

SAS (Statistical Analysis System)

SAS is a software suite for working with data and statistics. It suits tasks such as business reporting, forecasting, and data analysis. SAS has been around since 1976 and is widely trusted in the data industry.

Scala

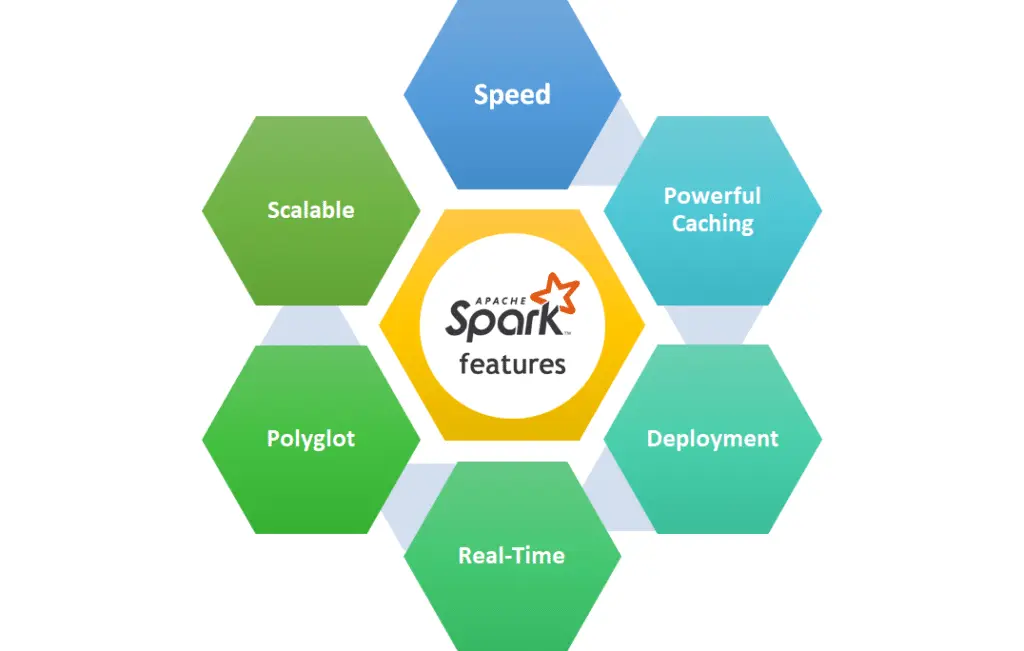

Scala is a language that runs on the JVM and is compatible with Java. It suits big projects with a lot of data. Scala is often used with Apache Spark, which distributes data tasks across machines and runs them at high speed.

TensorFlow

TensorFlow is a library for numerical computation and machine learning rather than a language of its own. It helps you handle enormous amounts of data. You can split your work into many small tasks and run them simultaneously on several processors (CPUs and GPUs). This significantly speeds up the training of large neural networks.

C#

C# (pronounced "C-sharp") was developed by Microsoft and is more than 20 years old. It is similar to Java but adds some helpful features of its own. Microsoft made data science easier in C# by adding Hadoop support and creating ML.NET, a framework for building machine learning applications that run on different platforms.

Ruby

Ruby is a language that is frequently used to process text. Developers also use it to prototype ideas, build servers, and automate routine tasks. Ruby is not as widely used in data science as Python or R, but it has some useful tools nonetheless.

For data science, Ruby can be used with:

• iruby – allows you to run Ruby in Jupyter notebooks.

• rserve-client – allows Ruby to communicate with R, another data tool.

• Jongleur – helps to organize and change data.

• rb-gsl – offers access to mathematical functions in the GNU Scientific Library.

Languages Used for Programming

Before you select a programming language, think about the work you do. For example, finance professionals use R to analyze stock market data and predict prices. In retail and e-commerce, programmers use Python to build systems that recommend products to shoppers.


Conclusion

Choosing the right programming language can help you progress in your data science career. Keep your goals, the needs of your job, and what recruiters look for in mind. Keep learning and building your skills. iCert Global offers data science courses that can help you succeed.

Contact Us For More Information:

Visit : www.icertglobal.com Email : info@icertglobal.com

Java